Simulate \(n\) independent and identically distributed random vectors from the \(d\)-dimensional \(N(0,\Sigma)\) distribution (zero-mean normal with covariance \(\Sigma\)) conditional on \(l<X<u\). Infinite values for \(l\) and \(u\) are accepted.

mvrandn(l, u, Sig, n, mu = NULL)

Arguments

| l | lower truncation limit |

|---|---|

| u | upper truncation limit |

| Sig | covariance matrix |

| n | number of simulated vectors |

| mu | location parameter |

Value

a \(d\) by \(n\) matrix storing the random vectors, \(X\), drawn from \(N(0,\Sigma)\), conditional on \(l<X<u\);

Details

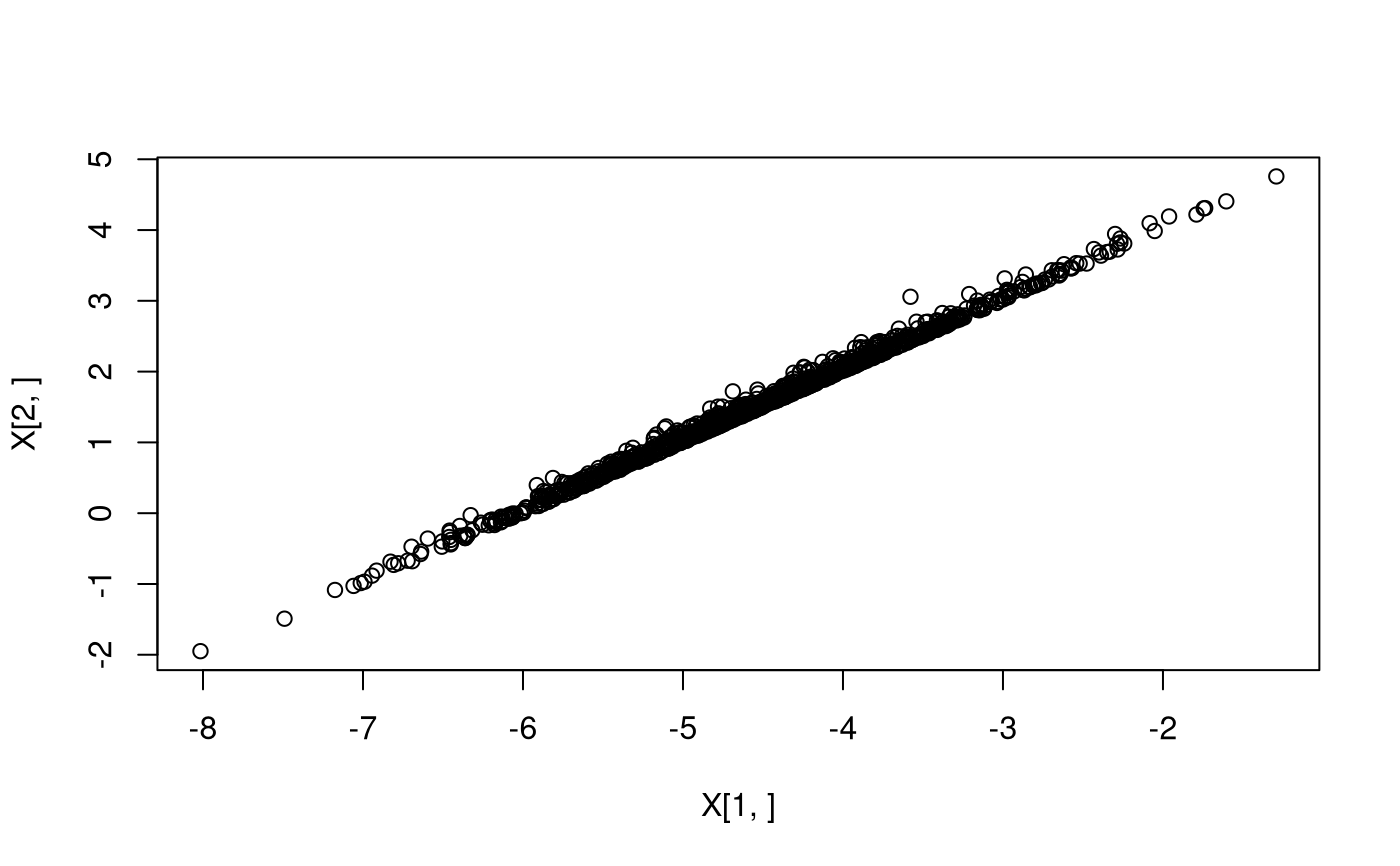

Bivariate normal: Suppose we wish to simulate a bivariate \(X\) from \(N(\mu,\Sigma)\), conditional on \(X_1-X_2<-6\). We can recast this as the problem of simulation of \(Y\) from \(N(0,A\Sigma A^\top)\) (for an appropriate matrix \(A\)) conditional on \(l-A\mu < Y < u-A\mu\) and then setting \(X=\mu+A^{-1}Y\). See the example code below.

Exact posterior simulation for Probit regression:Consider the Bayesian Probit Regression model applied to the

lupusdataset. Let the prior for the regression coefficients \(\beta\) be \(N(0,\nu^2 I)\). Then, to simulate from the Bayesian posterior exactly, we first simulate \(Z\) from \(N(0,\Sigma)\), where \(\Sigma=I+\nu^2 X X^\top,\) conditional on \(Z\ge 0\). Then, we simulate the posterior regression coefficients, \(\beta\), of the Probit regression by drawing \((\beta|Z)\) from \(N(C X^\top Z,C)\), where \(C^{-1}=I/\nu^2+X^\top X\). See the example code below.

Note

The algorithm may not work or be very inefficient if \(\Sigma\) is close to being rank deficient.

See also

Examples

# Bivariate example. Sig <- matrix(c(1,0.9,0.9,1), 2, 2); mu <- c(-3,0); l <- c(-Inf,-Inf); u <- c(-6,Inf); A <- matrix(c(1,0,-1,1),2,2); n <- 1e3; # number of sampled vectors Y <- mvrandn(l - A %*% mu, u - A %*% mu, A %*% Sig %*% t(A), n); X <- rep(mu, n) + solve(A, diag(2)) %*% Y; # now apply the inverse map as explained above plot(X[1,], X[2,]) # provide a scatterplot of exactly simulated pointsif (FALSE) { # Exact Bayesian Posterior Simulation Example. data("lupus"); # load lupus data Y = lupus[,1]; # response data X = lupus[,-1] # construct design matrix m=dim(X)[1]; d=dim(X)[2]; # dimensions of problem X=diag(2*Y-1) %*%X; # incorporate response into design matrix nu=sqrt(10000); # prior scale parameter C=solve(diag(d)/nu^2+t(X)%*%X); L=t(chol(t(C))); # lower Cholesky decomposition Sig=diag(m)+nu^2*X %*% t(X); # this is covariance of Z given beta l=rep(0,m);u=rep(Inf,m); est=mvNcdf(l,u,Sig,1e3); # estimate acceptance probability of Crude Monte Carlo print(est$upbnd/est$prob) # estimate the reciprocal of acceptance probability n=1e4 # number of iid variables z=mvrandn(l,u,Sig,n); # sample exactly from auxiliary distribution beta=L %*% matrix(rnorm(d*n),d,n)+C %*% t(X) %*% z; # simulate beta given Z and plot boxplots of marginals boxplot(t(beta)) # plot the boxplots of the marginal # distribution of the coefficients in beta print(rowMeans(beta)) # output the posterior means }