# Use ANOVA table to compute etasquared effect size

(etasq <- 0.0359/(23.1175 + 1.2355 + 0.0359))[1] 0.00147efs <- effectsize::eta_squared(

model_block,

partial = TRUE,

generalized = "subject")library(emmeans)

library(hecedsm)

library(ggplot2)

library(afex)

library(lmerTest)

# Force sum-to-zero parametrization for unordered factors

options(contrasts = c("contr.sum", "contr.poly"))

## Blocking factor

# Note that this is fundamentally repeated measures

url <- "https://edsm.rbind.io/files/data/resting_metabolic_rate.txt"

# transform integers to factors (categorical)

resting <- read.table(url, header = TRUE) |>

dplyr::mutate(

subject = factor(subject), #blocking factor

protocol = factor(protocol), #experimental factor

rate = rate/1000)

# Fit model with blocking factor

model_block <- aov(rate ~ subject + protocol, data = resting)

# One-way ANOVA (no blocking)

model_raw <- aov(rate ~ protocol, data = resting)

# ANOVA tables with and without blocking factor

anova(model_block) |>

knitr::kable()

anova(model_raw) |>

knitr::kable()| Df | Sum Sq | Mean Sq | F value | Pr(>F) | |

|---|---|---|---|---|---|

| subject | 8 | 23.117 | 2.890 | 37.423 | 0.000 |

| protocol | 2 | 0.036 | 0.018 | 0.233 | 0.795 |

| Residuals | 16 | 1.235 | 0.077 |

| Df | Sum Sq | Mean Sq | F value | Pr(>F) | |

|---|---|---|---|---|---|

| protocol | 2 | 0.036 | 0.018 | 0.018 | 0.982 |

| Residuals | 24 | 24.353 | 1.015 |

[1] 0.00147# Interaction plot

ggplot(data = resting,

aes(x = subject,

y = rate,

group = protocol,

color = protocol)) +

geom_line(linewidth = 1.5) +

labs(subtitle = "mean resting metabolic rate",

y = "",

x = "subject identifier") +

scale_color_grey()+

theme_classic() +

theme(legend.position = "bottom")

The purpose of this is to show the equivalence of repeated measures and mixed models for tests of interest in balanced designs with a single repeated measurement per individual, here captured through individual-specific random effects.

We consider three different models for imscore as a function of waiting (between-subject factor) and ratingtype (within-subject factor). Each individual id gives two ratings. The id are nested withing waiting, but crossed with ratingtype.

imscore by ratingtype as response,id and both waiting, ratingtype and their interaction.data(HOSM22_E3, package = 'hecedsm')

# Pivot from long to wide for MANOVA

HOSM22_E3w <- HOSM22_E3 |> tidyr::pivot_wider(

names_from = ratingtype,

values_from = imscore

)

xtabs(~ratingtype + waiting, HOSM22_E3) |>

knitr::kable() # crossed factors

head(xtabs(~id + waiting, HOSM22_E3), n = 5) |>

knitr::kable(row.names = TRUE) # id nested in waiting

head(xtabs(~id + ratingtype, HOSM22_E3), n = 5) |>

knitr::kable(row.names = TRUE)| long | short | |

|---|---|---|

| experience | 32 | 31 |

| prediction | 32 | 31 |

| long | short | |

|---|---|---|

| 1 | 0 | 2 |

| 2 | 2 | 0 |

| 3 | 2 | 0 |

| 4 | 0 | 2 |

| 5 | 2 | 0 |

| experience | prediction | |

|---|---|---|

| 1 | 1 | 1 |

| 2 | 1 | 1 |

| 3 | 1 | 1 |

| 4 | 1 | 1 |

| 5 | 1 | 1 |

# Fit instead the model with MANOVA using multivariate linear regression

manova_mod <- lm(cbind(prediction, experience) ~ waiting,

data = HOSM22_E3w)

# For repeated measures, we need to reconstruct the missing factor(s)

# corresponding to the repeated measures via a data frame (idata)

# that contains the factor levels and the variable name

# and idesign that includes the additional factors to our models

car::Anova(manova_mod,

idata = data.frame(

ratingtype = factor(c("prediction","experience"))),

idesign =~ratingtype, type = 3)

Type III Repeated Measures MANOVA Tests: Pillai test statistic

Df test stat approx F num Df den Df Pr(>F)

(Intercept) 1 0.893 508 1 61 < 2e-16 ***

waiting 1 0.156 11 1 61 0.0014 **

ratingtype 1 0.387 38 1 61 5.4e-08 ***

waiting:ratingtype 1 0.001 0 1 61 0.7757

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Fitting the same 2x2 model (with interaction), including a random intercept per subject

library(lmerTest)

mixmod <- lmer(

imscore ~ waiting*ratingtype + (1 | id),

data = HOSM22_E3)

# We get the same table if we set type III

anova(mixmod, type = 2)Type II Analysis of Variance Table with Satterthwaite's method

Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

waiting 7.76 7.76 1 61 11.26 0.0014 **

ratingtype 26.47 26.47 1 61 38.39 5.5e-08 ***

waiting:ratingtype 0.06 0.06 1 61 0.08 0.7757

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This is an incomplete block design: there are two (counterbalanced) combinations of anchor, vignette, and verdictsyst, but participants see 2 out of 8 combinations. Hence, no interaction between these and ID is possible.

# A tibble: 6 × 7

anchor vignette id vorder verdictsyst guilt pjaq

<fct> <fct> <fct> <fct> <fct> <dbl> <dbl>

1 strong-first 1 1 1 two 104. 67

2 strong-first 1 2 1 two 81.8 101

3 strong-first 1 3 1 two 87.8 65

4 strong-first 1 4 1 two 82.7 60

5 strong-first 1 5 1 two 76.8 75

6 strong-first 1 6 1 two 96.2 73options(contrasts = c("contr.sum", "contr.poly"))

# balanced!

xtabs(~ anchor + vignette + verdictsyst, data = C22), , verdictsyst = two

vignette

anchor 1 2

weak-first 32 32

strong-first 32 32

, , verdictsyst = three

vignette

anchor 1 2

weak-first 32 32

strong-first 32 32 id

interaction(anchor, vignette, verdictsyst) 1 2 3 4 5

weak-first.1.two 0 0 0 0 0

strong-first.1.two 1 1 1 1 1

weak-first.2.two 0 0 0 0 0

strong-first.2.two 0 0 0 0 0

weak-first.1.three 0 0 0 0 0

strong-first.1.three 0 0 0 0 0

weak-first.2.three 1 1 1 1 1

strong-first.2.three 0 0 0 0 0model <- lmer(

guilt ~ anchor*vignette*verdictsyst + pjaq + (1|id),

data = C22)

# pjaq is a covariate (so used to reduce error, plus the slope is of interest on it's own

# Cannot have interaction pjaq * id, because we get a single pjaq score per person

# No ambiguity for sum of square decomposition

anova(model)Type III Analysis of Variance Table with Satterthwaite's method

Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

anchor 1601 1601 1 124 7.49 0.0071 **

vignette 2251 2251 1 124 10.53 0.0015 **

verdictsyst 296 296 1 124 1.39 0.2413

pjaq 4277 4277 1 123 20.00 1.7e-05 ***

anchor:vignette 4 4 1 123 0.02 0.8959

anchor:verdictsyst 3 3 1 123 0.01 0.9028

vignette:verdictsyst 1238 1238 1 123 5.79 0.0176 *

anchor:vignette:verdictsyst 4 4 1 124 0.02 0.8945

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# No three-way interaction

# A two-way interaction between vignette:verdictsyst

# Computing differences between anchors

emmeans(model, specs = "anchor") |> pairs() contrast estimate SE df t.ratio p.value

(weak-first) - (strong-first) 5 1.83 124 2.736 0.0071

Results are averaged over the levels of: vignette, verdictsyst

Degrees-of-freedom method: kenward-roger # Computing differences in verdict separately for each vignette

emmeans(model, specs = "verdictsyst", by = "vignette") |> pairs()vignette = 1:

contrast estimate SE df t.ratio p.value

two - three 11.1 4.14 179 2.678 0.0081

vignette = 2:

contrast estimate SE df t.ratio p.value

two - three -6.8 4.14 179 -1.640 0.1028

Results are averaged over the levels of: anchor

Degrees-of-freedom method: kenward-roger book.url <- "http://stat.ethz.ch/~meier/teaching/book-anova"

chocolate <- read.table(file.path(book.url, "data/chocolate.dat"),

header = TRUE)

chocolate[,"rater"] <- factor(chocolate[,"rater"])

chocolate[,"background"] <- factor(chocolate[,"background"])

str(chocolate)'data.frame': 160 obs. of 4 variables:

$ choc : chr "A" "A" "B" "B" ...

$ rater : Factor w/ 10 levels "1","2","3","4",..: 1 1 1 1 1 1 1 1 2 2 ...

$ background: Factor w/ 2 levels "rural","urban": 1 1 1 1 1 1 1 1 1 1 ...

$ y : int 61 64 46 45 63 66 59 56 65 69 ...# Fit the model (note that rater recycles the id 1:10, so we need to be careful here!

chocolate <- chocolate |> dplyr::mutate(id = factor(paste(background, rater)))

# This model is correct

model <- lmer(y ~ background*choc +

(1 | rater:background) + (1 | rater:choc:background),

data = chocolate)

# This is fine too (because of the distinct IDs)

model <- lmer(y ~ background*choc +

(1 | id) + (1 | choc:id),

data = chocolate)

# This is WRONG (compare degrees of freedom)

# model <- lmer(y ~ background*choc +

# (1 | rater) + (1 | choc:rater),

# data = chocolate)

# Data are again balanced

anova(model)Type III Analysis of Variance Table with Satterthwaite's method

Sum Sq Mean Sq NumDF DenDF F value Pr(>F)

background 263 263 1 18 27.61 5.4e-05 ***

choc 4219 1406 3 54 147.74 < 2e-16 ***

background:choc 64 21 3 54 2.24 0.094 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# There is a no evidence of interaction

# The model output includes variance coefficients

summary(model)Linear mixed model fit by REML. t-tests use Satterthwaite's method [

lmerModLmerTest]

Formula: y ~ background * choc + (1 | id) + (1 | choc:id)

Data: chocolate

REML criterion at convergence: 921

Scaled residuals:

Min 1Q Median 3Q Max

-2.2380 -0.4833 -0.0244 0.4950 2.2997

Random effects:

Groups Name Variance Std.Dev.

choc:id (Intercept) 9.42 3.07

id (Intercept) 19.63 4.43

Residual 9.52 3.09

Number of obs: 160, groups: choc:id, 80; id, 20

Fixed effects:

Estimate Std. Error df t value Pr(>|t|)

(Intercept) 70.819 1.076 18.000 65.79 < 2e-16 ***

background1 -5.656 1.076 18.000 -5.25 5.4e-05 ***

choc1 5.431 0.729 54.000 7.45 7.7e-10 ***

choc2 -14.844 0.729 54.000 -20.35 < 2e-16 ***

choc3 7.881 0.729 54.000 10.81 4.1e-15 ***

background1:choc1 -0.394 0.729 54.000 -0.54 0.59

background1:choc2 -0.519 0.729 54.000 -0.71 0.48

background1:choc3 -0.944 0.729 54.000 -1.29 0.20

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) bckgr1 choc1 choc2 choc3 bck1:1 bck1:2

background1 0.000

choc1 0.000 0.000

choc2 0.000 0.000 -0.333

choc3 0.000 0.000 -0.333 -0.333

bckgrnd1:c1 0.000 0.000 0.000 0.000 0.000

bckgrnd1:c2 0.000 0.000 0.000 0.000 0.000 -0.333

bckgrnd1:c3 0.000 0.000 0.000 0.000 0.000 -0.333 -0.333 choc emmean SE df lower.CL upper.CL

A 76.2 1.3 35.8 73.6 78.9

B 56.0 1.3 35.8 53.3 58.6

C 78.7 1.3 35.8 76.1 81.3

D 72.3 1.3 35.8 69.7 75.0

Results are averaged over the levels of: background

Degrees-of-freedom method: kenward-roger

Confidence level used: 0.95 contrast estimate SE df t.ratio p.value

A - B 20.27 1.19 54 17.030 <.0001

A - C -2.45 1.19 54 -2.060 0.1803

A - D 3.90 1.19 54 3.270 0.0097

B - C -22.72 1.19 54 -19.080 <.0001

B - D -16.37 1.19 54 -13.750 <.0001

C - D 6.35 1.19 54 5.330 <.0001

Results are averaged over the levels of: background

Degrees-of-freedom method: kenward-roger

P value adjustment: tukey method for comparing a family of 4 estimates # C has the highest rating, but indistinguishable from A

# B is worst

# Compare variability - extract variance from model

(vars <- c(unlist(VarCorr(model)), sigma(model)^2))choc:id id

9.42 19.63 9.52 # Compare these with the output of summary

# Correlation between same chocolate/rater

sum(vars[1:2])/sum(vars)[1] 0.753 id

0.509 ---

title: "MATH 80667A - Week 10"

author: "Léo Belzile"

format: html

eval: true

echo: true

message: false

warning: false

code-tools:

source: true

toggle: false

caption: "Download Quarto file"

---

```{r}

#| echo: false

options(digits = 3, knitr.kable.NA = '')

```

# Blocking factor

```{r}

#| label: tbl-anova-blocking

#| tbl-cap: "Analysis of variance table"

#| tbl-subcaption: ["with blocking factor","without the blocking factor"]

#| layout-ncol: 2

library(emmeans)

library(hecedsm)

library(ggplot2)

library(afex)

library(lmerTest)

# Force sum-to-zero parametrization for unordered factors

options(contrasts = c("contr.sum", "contr.poly"))

## Blocking factor

# Note that this is fundamentally repeated measures

url <- "https://edsm.rbind.io/files/data/resting_metabolic_rate.txt"

# transform integers to factors (categorical)

resting <- read.table(url, header = TRUE) |>

dplyr::mutate(

subject = factor(subject), #blocking factor

protocol = factor(protocol), #experimental factor

rate = rate/1000)

# Fit model with blocking factor

model_block <- aov(rate ~ subject + protocol, data = resting)

# One-way ANOVA (no blocking)

model_raw <- aov(rate ~ protocol, data = resting)

# ANOVA tables with and without blocking factor

anova(model_block) |>

knitr::kable()

anova(model_raw) |>

knitr::kable()

```

```{r}

# Use ANOVA table to compute etasquared effect size

(etasq <- 0.0359/(23.1175 + 1.2355 + 0.0359))

efs <- effectsize::eta_squared(

model_block,

partial = TRUE,

generalized = "subject")

```

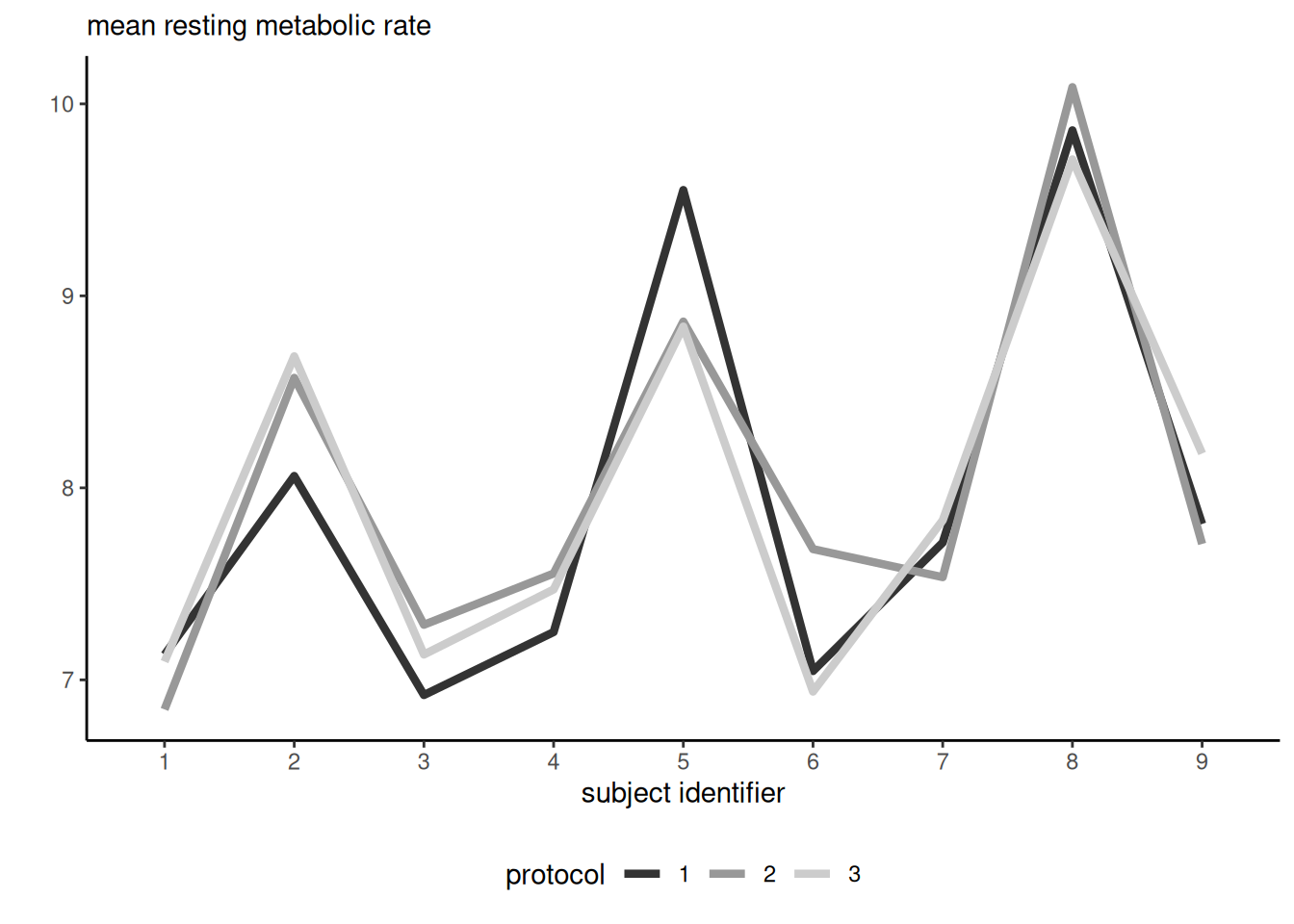

```{r}

#| label: fig-interaction-metabolic

#| fig-cap: "Interaction plot"

# Interaction plot

ggplot(data = resting,

aes(x = subject,

y = rate,

group = protocol,

color = protocol)) +

geom_line(linewidth = 1.5) +

labs(subtitle = "mean resting metabolic rate",

y = "",

x = "subject identifier") +

scale_color_grey()+

theme_classic() +

theme(legend.position = "bottom")

```

# Mixed model

## Hosano et al. (2022)

The purpose of this is to show the equivalence of repeated measures and mixed models for tests of interest

in balanced designs with a single repeated measurement per individual, here captured through individual-specific random effects.

We consider three different models for `imscore` as a function of `waiting` (between-subject factor) and `ratingtype` (within-subject factor). Each individual `id` gives two ratings. The `id` are nested withing `waiting`, but crossed with `ratingtype`.

- a mixed ANOVA (within-between) full-factorial design (i.e., including the interaction term),

- a MANOVA with the two measurements of `imscore` by `ratingtype` as response,

- a linear mixed model with a random effect for `id` and both `waiting`, `ratingtype` and their interaction.

```{r}

#| layout-ncol: 3

data(HOSM22_E3, package = 'hecedsm')

# Pivot from long to wide for MANOVA

HOSM22_E3w <- HOSM22_E3 |> tidyr::pivot_wider(

names_from = ratingtype,

values_from = imscore

)

xtabs(~ratingtype + waiting, HOSM22_E3) |>

knitr::kable() # crossed factors

head(xtabs(~id + waiting, HOSM22_E3), n = 5) |>

knitr::kable(row.names = TRUE) # id nested in waiting

head(xtabs(~id + ratingtype, HOSM22_E3), n = 5) |>

knitr::kable(row.names = TRUE)

```

```{r}

mod <- aov_ez(id = "id",

dv = "imscore",

between = "waiting",

within = "ratingtype",

data = HOSM22_E3)

# Obtain MANOVA table

MANOVA_tab <- mod$Anova # same as below

```

```{r}

#| label: tbl-MANOVA

#| tbl-cap: "Type III Repeated Measures MANOVA Tests: Pillai test statistic"

# Fit instead the model with MANOVA using multivariate linear regression

manova_mod <- lm(cbind(prediction, experience) ~ waiting,

data = HOSM22_E3w)

# For repeated measures, we need to reconstruct the missing factor(s)

# corresponding to the repeated measures via a data frame (idata)

# that contains the factor levels and the variable name

# and idesign that includes the additional factors to our models

car::Anova(manova_mod,

idata = data.frame(

ratingtype = factor(c("prediction","experience"))),

idesign =~ratingtype, type = 3)

```

```{r}

# Fitting the same 2x2 model (with interaction), including a random intercept per subject

library(lmerTest)

mixmod <- lmer(

imscore ~ waiting*ratingtype + (1 | id),

data = HOSM22_E3)

# We get the same table if we set type III

anova(mixmod, type = 2)

```

## Curley et al.

This is an incomplete block design: there are two (counterbalanced) combinations of `anchor`, `vignette`, and `verdictsyst`, but participants see 2 out of 8 combinations. Hence, no interaction between these and ID is possible.

```{r}

data(C22, package = "hecedsm")

head(C22)

options(contrasts = c("contr.sum", "contr.poly"))

# balanced!

xtabs(~ anchor + vignette + verdictsyst, data = C22)

xtabs(~ interaction(anchor, vignette, verdictsyst) + id, data = C22)[,1:5]

model <- lmer(

guilt ~ anchor*vignette*verdictsyst + pjaq + (1|id),

data = C22)

# pjaq is a covariate (so used to reduce error, plus the slope is of interest on it's own

# Cannot have interaction pjaq * id, because we get a single pjaq score per person

# No ambiguity for sum of square decomposition

anova(model)

# No three-way interaction

# A two-way interaction between vignette:verdictsyst

# Computing differences between anchors

emmeans(model, specs = "anchor") |> pairs()

# Computing differences in verdict separately for each vignette

emmeans(model, specs = "verdictsyst", by = "vignette") |> pairs()

```

## Chocolate rating

```{r}

book.url <- "http://stat.ethz.ch/~meier/teaching/book-anova"

chocolate <- read.table(file.path(book.url, "data/chocolate.dat"),

header = TRUE)

chocolate[,"rater"] <- factor(chocolate[,"rater"])

chocolate[,"background"] <- factor(chocolate[,"background"])

str(chocolate)

# Fit the model (note that rater recycles the id 1:10, so we need to be careful here!

chocolate <- chocolate |> dplyr::mutate(id = factor(paste(background, rater)))

# This model is correct

model <- lmer(y ~ background*choc +

(1 | rater:background) + (1 | rater:choc:background),

data = chocolate)

# This is fine too (because of the distinct IDs)

model <- lmer(y ~ background*choc +

(1 | id) + (1 | choc:id),

data = chocolate)

# This is WRONG (compare degrees of freedom)

# model <- lmer(y ~ background*choc +

# (1 | rater) + (1 | choc:rater),

# data = chocolate)

# Data are again balanced

anova(model)

# There is a no evidence of interaction

# The model output includes variance coefficients

summary(model)

# Look at best chocolate type overall

(emm <- emmeans(model, specs = "choc"))

emm |> contrast("pairwise")

# C has the highest rating, but indistinguishable from A

# B is worst

# Compare variability - extract variance from model

(vars <- c(unlist(VarCorr(model)), sigma(model)^2))

# Compare these with the output of summary

# Correlation between same chocolate/rater

sum(vars[1:2])/sum(vars)

# Correlation between measurements from same rater, different chocolates

vars[2]/sum(vars)

```