Using open data to teach reproducibility

SSC Annual meeting

Wednesday, May 28, 2025

Background information

I teach “Experimental Design and Statistical Methods” to PhD students in management sciences, coming from

- marketing,

- organizational behaviour and human resources,

- user experience,

- information technology, etc.

Students have heterogeneous backgrounds in terms of both statistical knowledge and programming skills.

Reproducibility crisis

The garden of forking paths

- Change of paradigm and generational change: my students' supervisors have not been trained to do this work properly, so we need to train students to adapt to the evolving landscape.

- Good practices (preregistration, sharing code and data, transparency) help alleviate reproducibility problems.

- New easy-to-use tools are available to help researchers (e.g., ResearchBox).

- Instructors can leverage these to generate learning opportunities!

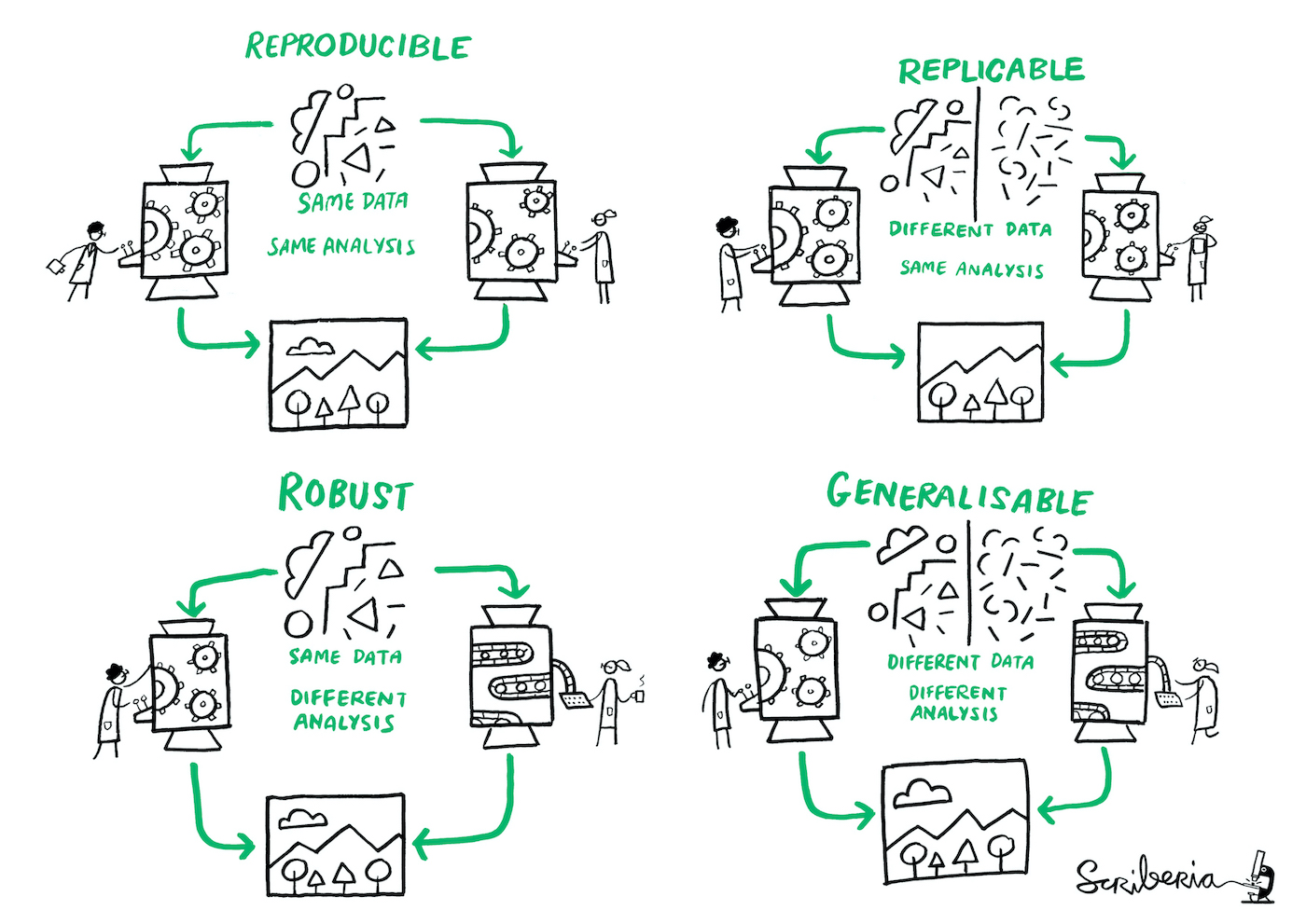

Defining reproducibility

Definition of different dimensions of reproducible research (from The Turing Way project, illustration by Scriberia).

Ingredients for reproducible research

Mandatory

- Raw or cleaned data

- Description of exclusion rules

- Methodological details:

- models

- variables

- statistics (coefficients, standard errors, degrees of freedom, \(p\)-values, effect size, confidence intervals).

Optional

- Preregistration report

- Questionnaires, etc.

- Code

- Descriptive statistics (sample size, etc.)

Details may be hidden in code (also via comments) and in the supplementary material.

How to find open data

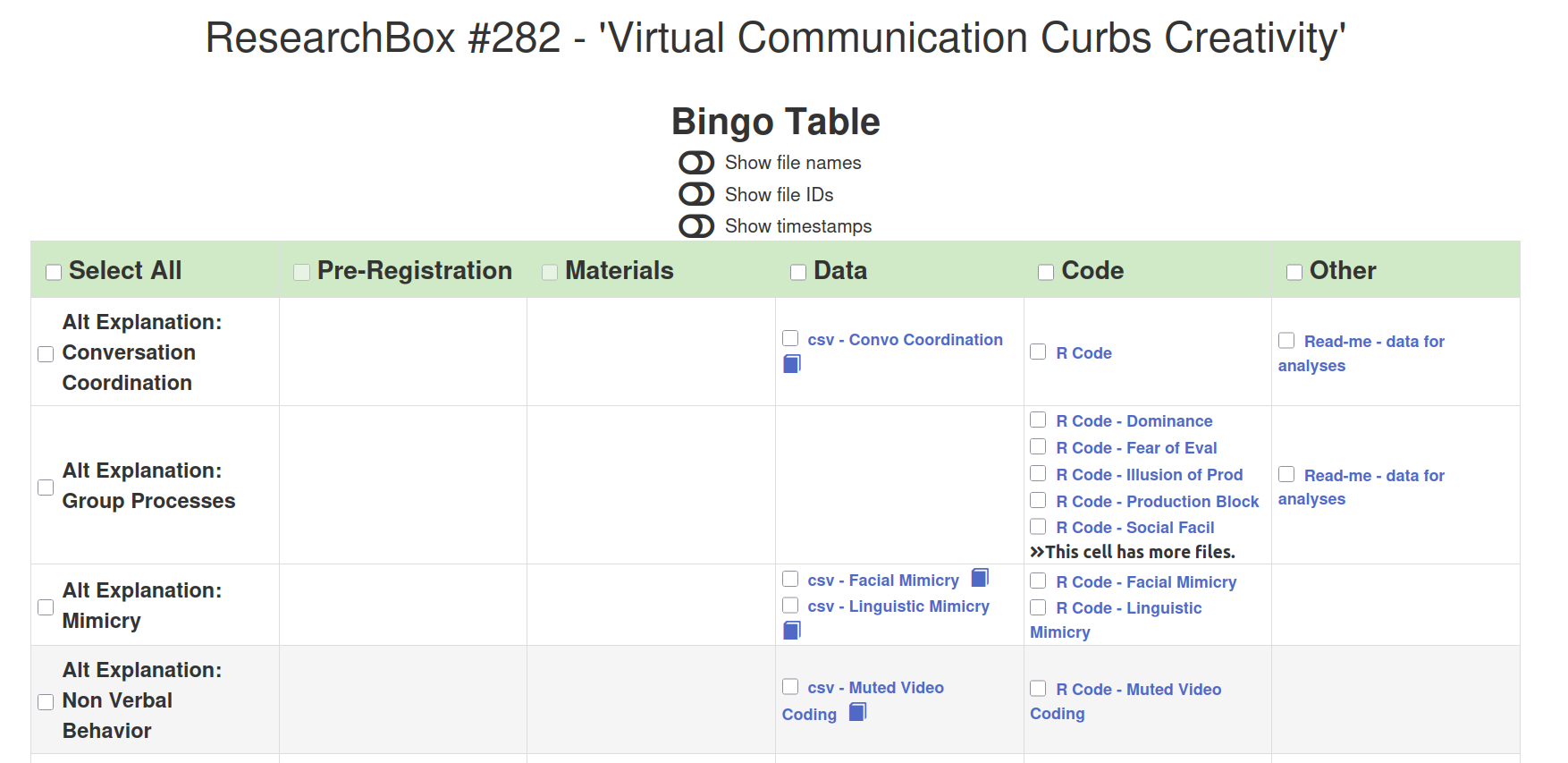

- Data drawn from the Open Science Framework (OSF) or ResearchBox

- Search by keywords (in abstracts or in code)

Criteria for selecting papers

Papers should cover a broad variety of fields

- interesting story or research question

- good experimental design

- course material overlap!

- enough details for reproducibility

For my course, diversity of topics and fields is important.

Sharing open-access data

All datasets are preprocessed and bundled in an R package with documentation (with an online page).

- Data cleaning is an essential skill, but too big a hurdle for my audience for anything beyond simple wrangling

- Some papers provide cleaning scripts, but

- the code is often messy,

- different software is used, and

- package obsolescence (tidyverse) complicates things.

Activities based on published papers

- Presentation of case studies in class

- Discussion and criticism of the methods and results sections

- Weekly assignments (reproducibility)

- Peer-review project in teams

Lesson plan

- Recap of previous session, and problem set review

- Story time: describe the study for ‘motivation’

- Substantive content (methods, concepts)

- Reproducing results (workbook with code and conclusions, versus the paper)

- Annotated papers with comments and criticism

Peer-reviewing task

- Students learn by example, not by osmosis

- Show them the good, the bad and the ugly.

- Compare code with the paper, if available:

- what is omitted?

- do results and descriptions match?

What we don’t (often) teach

- How to do randomization (especially for repeated measures)

- How to choose the response variable

- How to clean the data

- How to make effective data visualizations (and avoid dynamite plots)!

- How to define sensible exclusion criteria

- How to report conclusions

We can learn or teach these from (counter)examples drawn from published papers.
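For the first item, a minimal sketch of how presentation order could be randomized in a repeated-measures design. The function name `counterbalanced_orders` and the condition labels are hypothetical, and the sketch assumes a small number of conditions so that all orderings can be enumerated.

```python
import itertools
import random


def counterbalanced_orders(conditions, n_participants, seed=None):
    """Give each participant one ordering of the conditions.

    Orderings are dealt out in shuffled blocks that each contain every
    possible permutation once, so orders stay balanced across participants.
    """
    rng = random.Random(seed)
    orders = list(itertools.permutations(conditions))
    schedule = []
    while len(schedule) < n_participants:
        block = orders[:]       # one copy of every possible order
        rng.shuffle(block)      # random sequence within the block
        schedule.extend(block)
    return schedule[:n_participants]


# Hypothetical example: three within-subject conditions, 12 participants.
for participant, order in enumerate(counterbalanced_orders(["A", "B", "C"], 12, seed=1), start=1):
    print(participant, order)
```

With 12 participants and three conditions, each of the six possible orders is used exactly twice. With many conditions, a Latin square would be a more practical alternative to full enumeration.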

Opportunities for in-class discussion

For example, consider data collection

- Discuss with students the reliability of data collected on Prolific and Amazon Mechanical Turk (external validity?)

- Randomization and stratification: the tools must relate to the software used for data collection (e.g., Qualtrics)
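To make the logic of stratified randomization concrete in class, here is a minimal offline sketch of permuted-block assignment within strata. The function name, arm labels and strata are hypothetical. In practice the assignment would be done by the randomization features of the survey platform, not by this code.

```python
import random
from collections import defaultdict


def stratified_block_assignment(participants, arms, seed=None):
    """Assign each (id, stratum) pair to an arm using permuted blocks.

    Within every stratum, arms are handed out from a shuffled block that
    contains each arm once, so group sizes stay balanced per stratum.
    """
    rng = random.Random(seed)
    pending = defaultdict(list)   # stratum -> arms left in the current block
    assignment = {}
    for pid, stratum in participants:
        if not pending[stratum]:  # start a new shuffled block when empty
            block = list(arms)
            rng.shuffle(block)
            pending[stratum] = block
        assignment[pid] = pending[stratum].pop()
    return assignment


# Hypothetical example: eight participants in two strata, two arms.
people = [(i, "novice" if i % 2 else "expert") for i in range(8)]
print(stratified_block_assignment(people, ["control", "treatment"], seed=42))
```

Each stratum ends up with two participants per arm here, because four participants fill exactly two blocks of size two.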

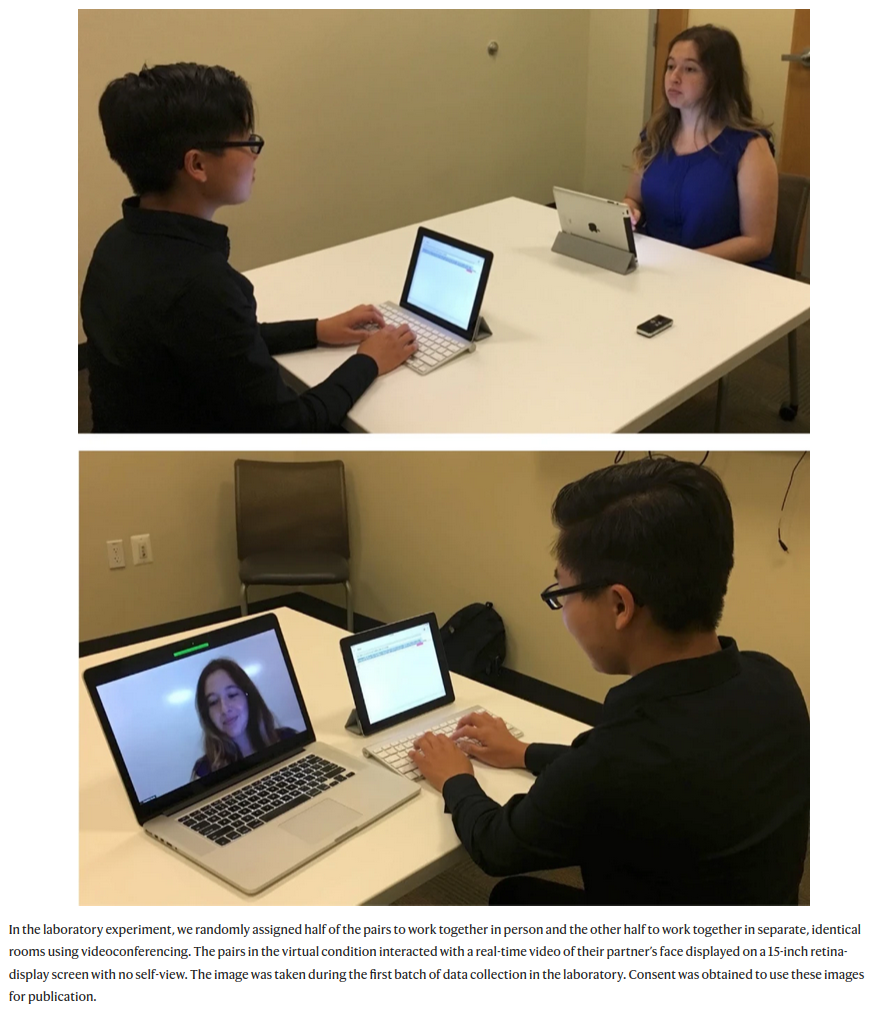

1. Impact of videoconferencing on idea generation

Brucks and Levav (2022)

In a laboratory study […] we demonstrate that videoconferencing hampers idea generation because it focuses communicators on a screen, which prompts a narrower cognitive focus. Our results suggest that virtual interaction comes with a cognitive cost for creative idea generation.

(Subjective) measurement of the number of creative ideas, variety of models that can be fit, comparing different tests.

- Model: Linear mixed model, negative binomial regression

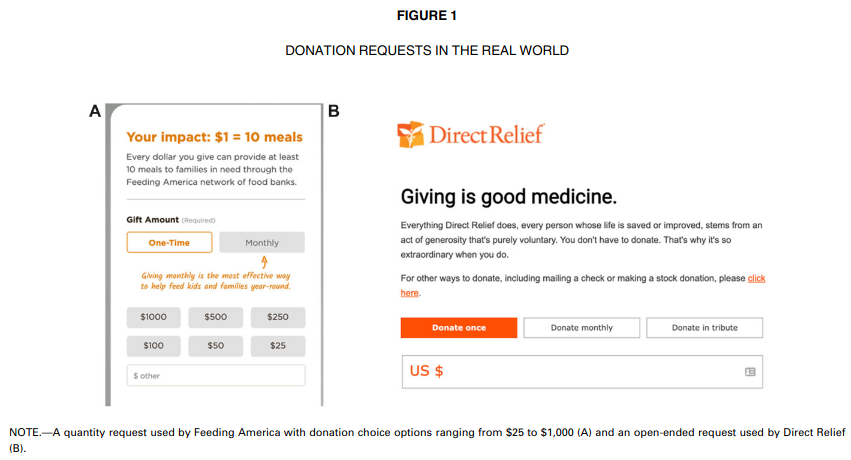

2. Suggesting amounts for donations

Moon and VanEpps (2023)

Across seven studies, we provide evidence that quantity requests, wherein people consider multiple choice options of how much to donate (e.g., $5, $10, or $15), increase contributions compared to open-ended requests.

Our findings offer new conceptual insights into how quantity requests increase contributions as well as practical implications for charitable organizations to optimize contributions by leveraging the use of quantity requests.

Model: Tobit type II regression and Poisson regression (independence test)

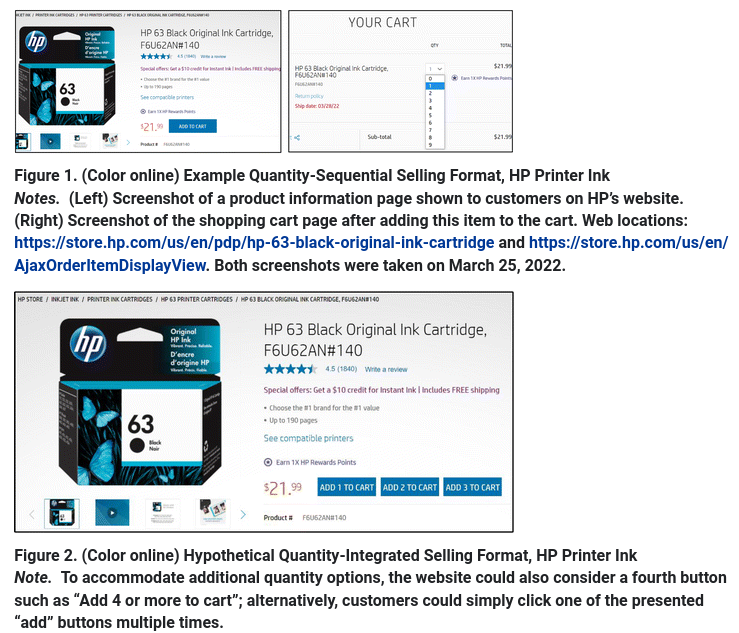

3. Integrated decisions in online shopping

Duke and Amir (2023)

Customers must often decide on the quantity to purchase in addition to whether to purchase. The current research introduces and compares the quantity-sequential selling format, in which shoppers resolve the purchase and quantity decisions separately, with the quantity-integrated selling format, where shoppers simultaneously consider whether and how many to buy. Although retailers often use the sequential format, we demonstrate that the integrated format can increase purchase rates.

Model: logistic regression.

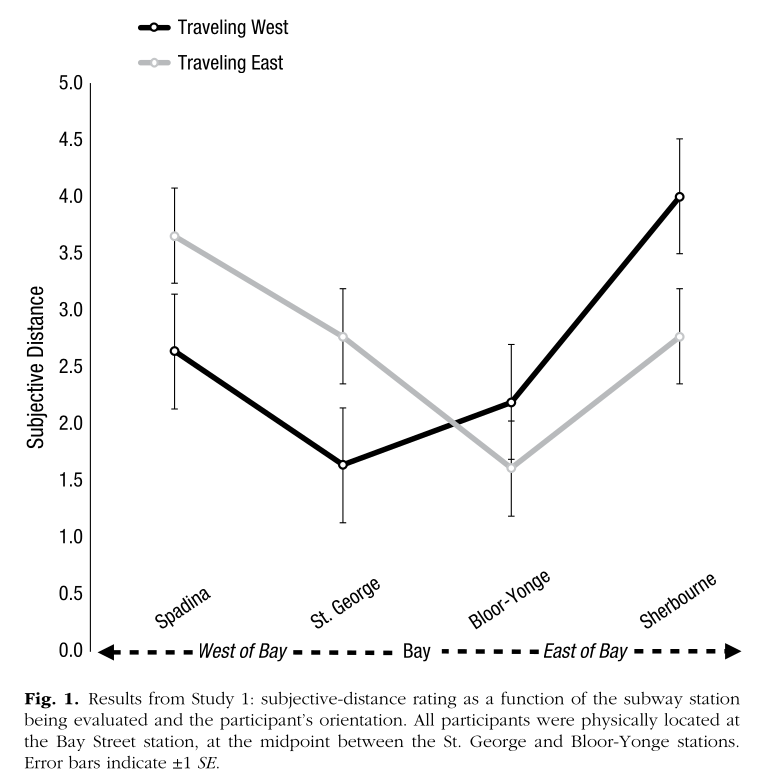

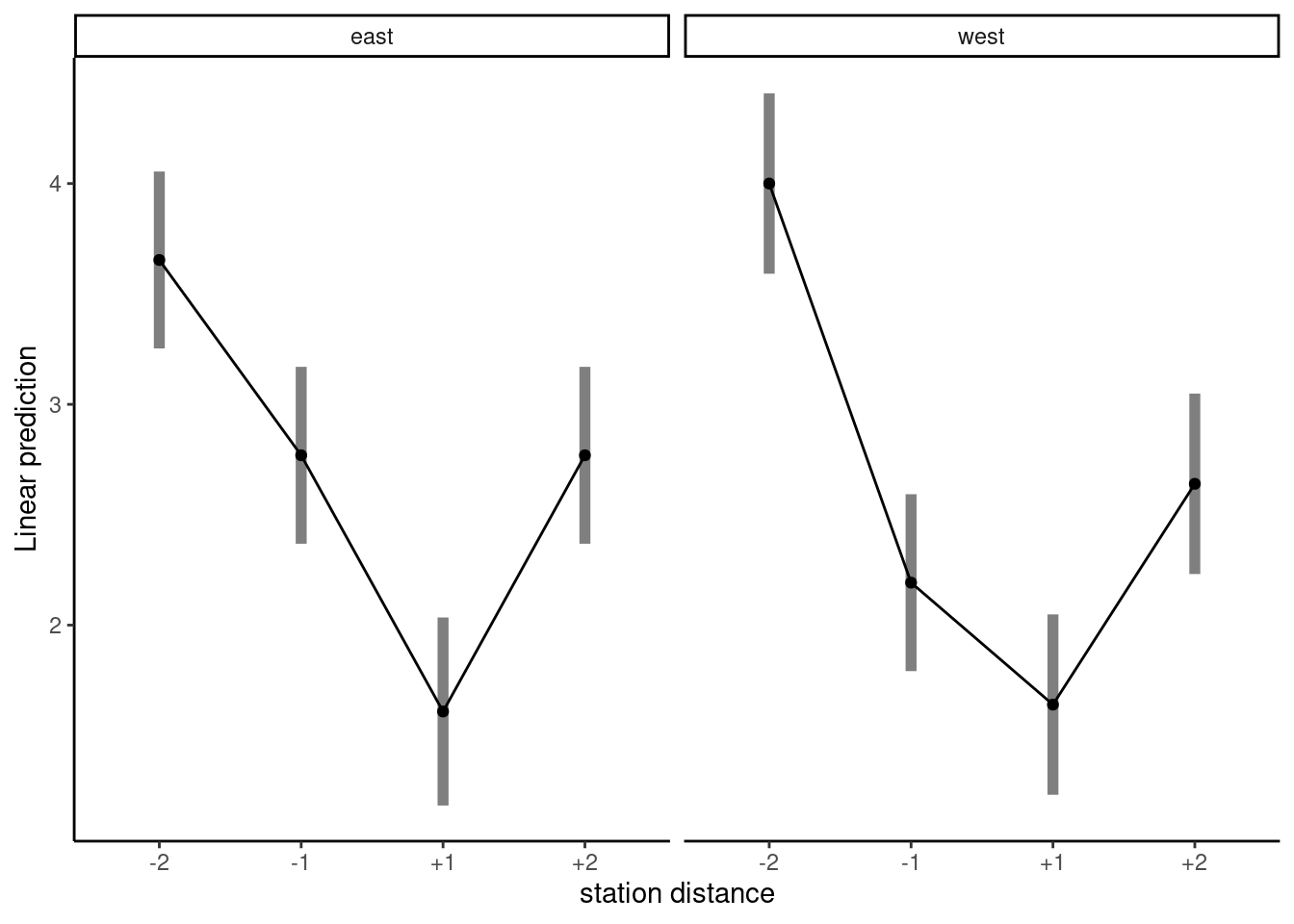

4. Subjective perception of distance

Maglio and Polman (2014) postulate that “spatial orientation toward (vs. away from) a location will cause that location to feel closer.”

202 volunteers were recruited at the Bay Street subway station in Toronto, Ontario, Canada. All participants were asked to rate the subjective distance of another subway station on the line that they were traveling; the station was either coming up (e.g., the next stop) or just past (e.g., the previous stop).

Model: 2 x 4 between-subject ANOVA.
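A quick check students can run before reading a results section: the degrees of freedom implied by the design. The sketch below assumes a fully crossed between-subjects design with no empty cells and no exclusions among the 202 participants. The function name and the factor labels `A` and `B` are hypothetical.

```python
def factorial_anova_df(n_total, levels_a, levels_b):
    """Degrees of freedom for a two-way between-subjects ANOVA with interaction."""
    cells = levels_a * levels_b
    return {
        "A": levels_a - 1,
        "B": levels_b - 1,
        "A:B": (levels_a - 1) * (levels_b - 1),
        "residual": n_total - cells,   # one mean estimated per cell
    }


print(factorial_anova_df(n_total=202, levels_a=2, levels_b=4))
# {'A': 1, 'B': 3, 'A:B': 3, 'residual': 194}
```

Any reported \(F\) statistic whose degrees of freedom differ from these values signals exclusions, missing data or a different model.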

Refactoring and contrasts

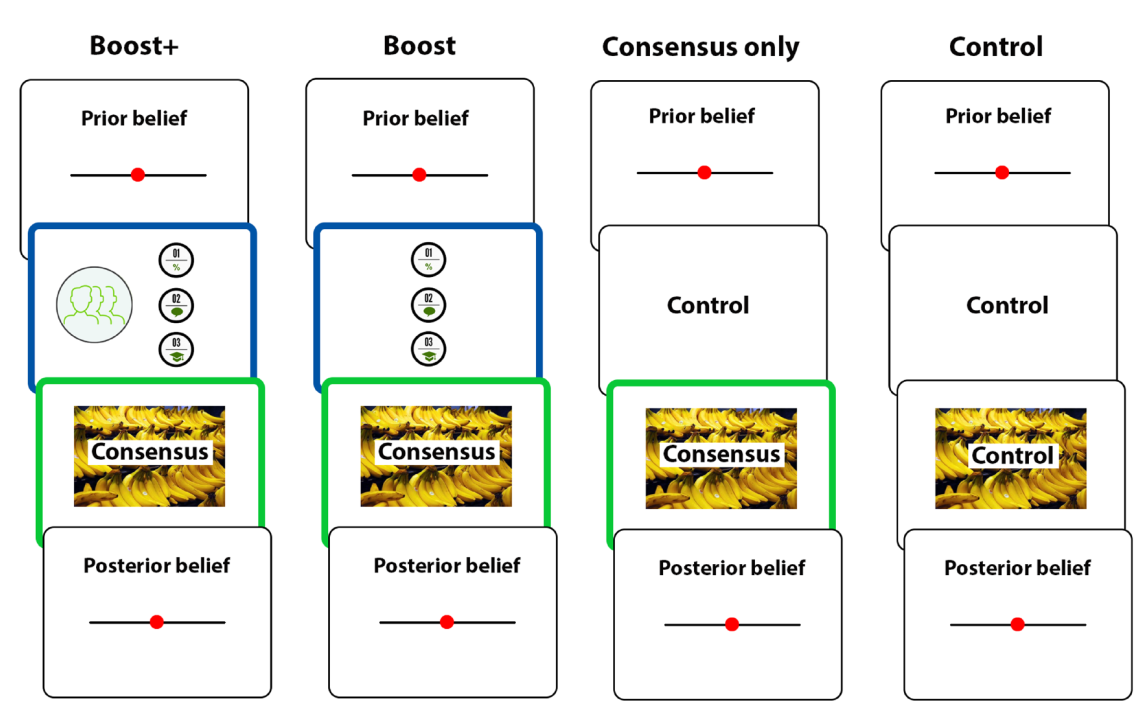

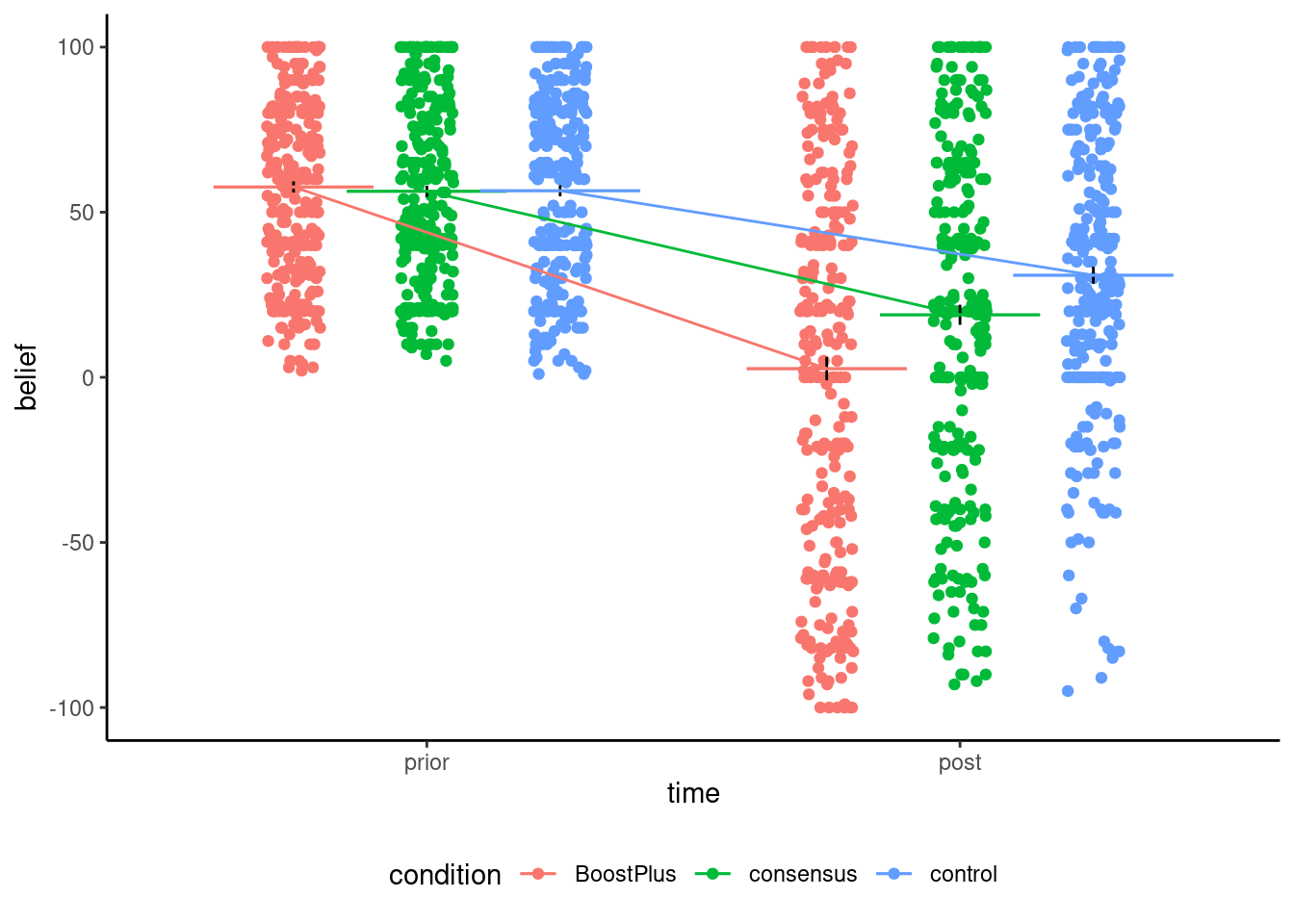

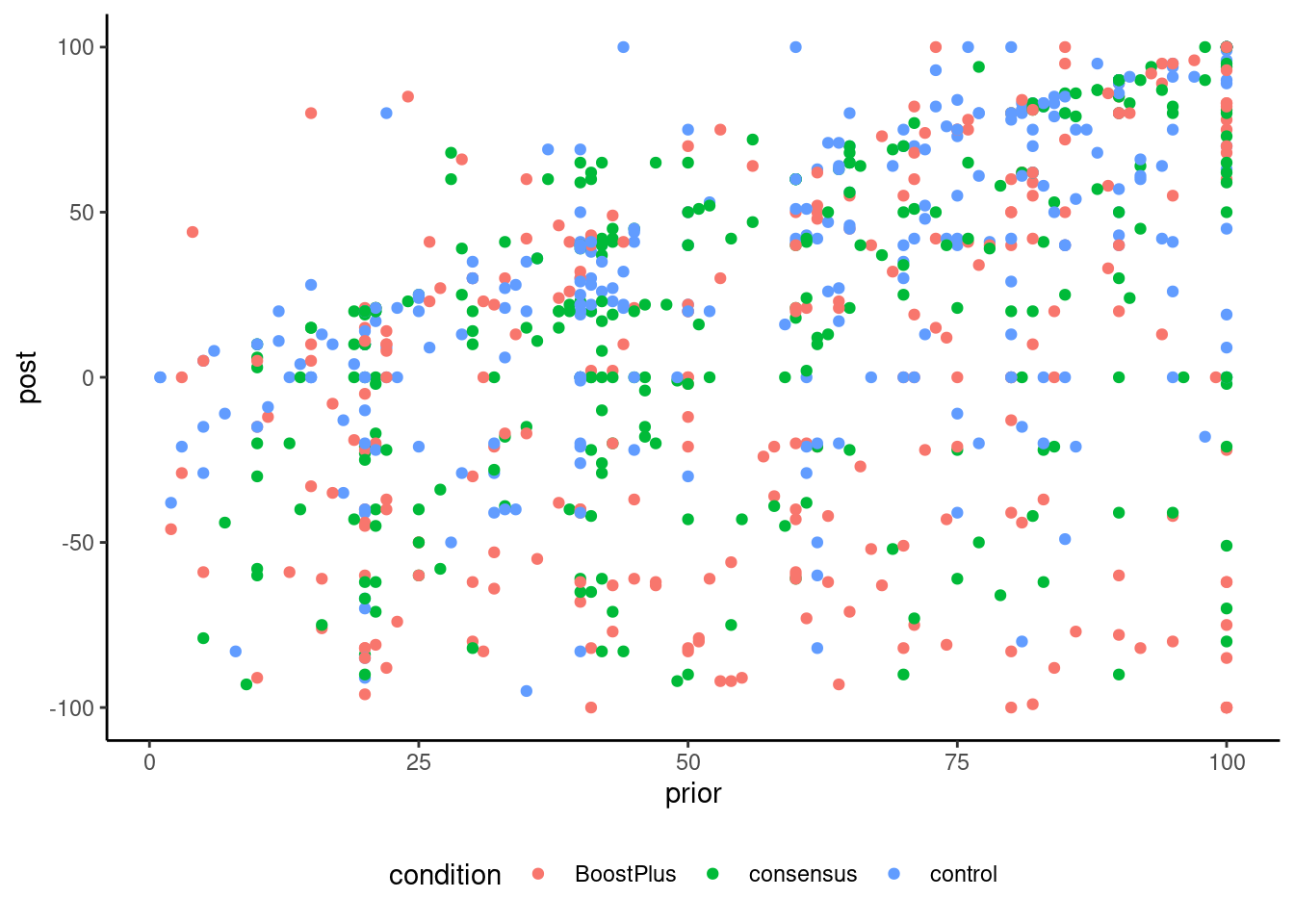

5. Boosting understanding of scientific consensus to correct false beliefs

Stekelenburg et al. (2021)

In three experiments with more than 1,500 U.S. adults who held false beliefs, participants first learned the value of scientific consensus and how to identify it. Subsequently, they read a news article with information about a scientific consensus opposing their beliefs.

Model: 3 group between-subject ANCOVA

Belief of harm of genetically engineered food

Preregistration, choice of topic (Study 1 on climate change produced no difference), underpowered second study, power calculation, scale of measurement and response, population heterogeneity
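To illustrate what a power calculation involves, here is a rough sketch using the normal approximation for a two-sided comparison of two group means. This is a simplification, not the three-group ANCOVA of the paper, and the standardized effect sizes below are purely illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided two-sample comparison.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d)^2,
    where d is the standardized mean difference (Cohen's d).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


print(n_per_group(0.5))  # 63
print(n_per_group(0.2))  # 393
```

The required sample size grows with the inverse square of the effect size, which is why a study designed around an optimistic effect can end up underpowered. The exact calculation based on the \(t\) distribution gives slightly larger numbers.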

Choose your battles

- This is a methodology course: students must learn programming on the fly, via assignments and examples (I provide recordings with notebooks).

- I allow both R and SPSS, but this complicates in-class presentation and management.

Maximally uninteresting

Results sections are often terse and procedural. Students find them intimidating (akin to an unknown foreign language).

Students must nevertheless learn to criticize statistical methodology and spot potential problems.

Peer-review task

- Select papers that are suitable, but offer students a choice

- level of difficulty

- only methods and concepts covered in class

- access to data!

- Narrow the choice if there are multiple experiments

- Ask them to focus only on statistical aspects

Spot the problems

A single factor (Levels of social presence: No vs. Medium vs. High) within-subjects design was used. In total, \(\color{#0072ce}{335}\) participants recruited on Amazon Mechanical Turk (MTurk.com) completed an online survey.

A repeated measure ANOVA with social presence (no, medium, and high) as the independent variable and positive emotional reaction as the dependent variable revealed a significant main effect \((F({\color{#0072ce}{1, 240}})=19.115, p<0.001)\).

Linear regressions with random intercepts to account for repeated measures were performed. Results show that both positive emotional reaction \((t({\color{#0072ce}{2135}}) = 14.722;\) \(\beta=0.308,\) \(p<0.0001\) […]

The good, the bad and the ugly

Degrees of freedom do not match the reported sample size or methods.

Digging into the data reveals an incomplete, unbalanced design:

- between 3 and 10 measurements per participant, mode 6.

- out of 335 participants, 94 did not see all three categories!

Analysis in SPSS kept only complete cases!

The degrees of freedom reveal that the authors used ordinary linear regression (no accounting for repeated measures).
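The mismatch can be checked with simple arithmetic. The sketch below assumes a balanced one-way within-subjects ANOVA with sphericity, and for the regression it assumes a model with two coefficients (intercept and one slope). Both function names and that coefficient count are assumptions made for illustration.

```python
def rm_anova_df(n_participants, n_levels):
    """(effect, error) df for a one-way within-subjects ANOVA, sphericity assumed."""
    df_effect = n_levels - 1
    df_error = (n_levels - 1) * (n_participants - 1)
    return df_effect, df_error


def ols_implied_rows(residual_df, n_coefficients):
    """Number of data rows implied by the residual df of an ordinary regression."""
    return residual_df + n_coefficients


reported_n = 335    # participants reported in the paper
incomplete = 94     # participants who did not see all three categories
levels = 3          # no, medium and high social presence

print(rm_anova_df(reported_n, levels))               # (2, 668)
print(rm_anova_df(reported_n - incomplete, levels))  # (2, 480)
print(ols_implied_rows(2135, n_coefficients=2))      # 2137
```

Neither pair matches the reported \(F(1, 240)\). The value 240 does equal the number of complete cases minus one (335 - 94 - 1), consistent with an analysis that kept only complete cases. Under the two-coefficient assumption, \(t(2135)\) implies 2137 rows, about 6.4 per participant, so the degrees of freedom count observations and not participants.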

Weekly exercises

- Textbook or simulated data work as well, but lack motivation and often the messy features of real data.

- Difficulty of examples and questions: follow the Goldilocks principle

- they need to be easy enough for a first exposure,

- but hard enough to relate to the problem set.

Lessons learned the hard way

- Some students try to just ‘get the answer right’, without learning the method.

- Frustration can build up, especially for people with low programming proficiency (steep learning curve)

- Despite examples, uneven use of literate reporting with Quarto (lots of unformatted output and copy-paste).

Free to use

References

6. Social presence in social media products

Poirier et al. (2024)