10 Variational inference

Variational inference, whose name comes from the calculus of variations, approximates the posterior \(p(\boldsymbol{\theta} \mid \boldsymbol{y})\) by a member of a family of distributions whose density or mass function is \(g(\cdot; \boldsymbol{\psi})\) with parameters \(\boldsymbol{\psi}.\) The objective of inference is thus to find the parameters that minimize some measure of discrepancy between the postulated posterior and the approximation: doing so leads to optimization problems. Variational inference is widespread in machine learning and in large problems where Markov chain Monte Carlo might be infeasible.

This chapter is organized as follows: we first review notions of model misspecification and the Kullback–Leibler divergence. We then consider approximation schemes and some examples involving mixtures and model selection where analytical derivations are possible: these show how variational inference differs from the Laplace approximation and shed light on some practical aspects. Good references include Chapter 10 of Bishop (2006); most modern applications use automatic differentiation variational inference (ADVI, Kucukelbir et al. (2017)) or stochastic optimization via black-box variational inference.

10.1 Kullback–Leibler divergence and evidence lower bound

Consider \(g(\boldsymbol{\theta};\boldsymbol{\psi})\) with \(\boldsymbol{\psi} \in \mathbb{R}^J,\) an approximating density function whose integral is one over \(\boldsymbol{\Theta} \subseteq \mathbb{R}^p\) and whose support includes that of \(p(\boldsymbol{y}, \boldsymbol{\theta})\) over \(\boldsymbol{\Theta}.\) Suppose data were generated from a model with true density \(f_t\) and we consider instead the family of distributions \(g(\cdot; \boldsymbol{\psi});\) the latter may or may not contain \(f_t\) as a special case. Intuitively, if we were to estimate the model by maximum likelihood, we expect that the model returned will be, in some sense, the one closest to \(f_t\) among those considered.

Definition 10.1 (Kullback–Leibler divergence) The Kullback–Leibler divergence between densities \(f_t(\cdot)\) and \(g(\cdot; \boldsymbol{\psi}),\) is \[\begin{align*} \mathsf{KL}(f_t \parallel g) &=\int \log \left(\frac{f_t(\boldsymbol{x})}{g(\boldsymbol{x}; \boldsymbol{\psi})}\right) f_t(\boldsymbol{x}) \mathrm{d} \boldsymbol{x}\\ &= \int \log f_t(\boldsymbol{x}) f_t(\boldsymbol{x}) \mathrm{d} \boldsymbol{x} - \int \log g(\boldsymbol{x}; \boldsymbol{\psi}) f_t(\boldsymbol{x}) \mathrm{d} \boldsymbol{x} \\ &= \mathsf{E}_{f_t}\{\log f_t(\boldsymbol{X})\} - \mathsf{E}_{f_t}\{\log g(\boldsymbol{X}; \boldsymbol{\psi})\} \end{align*}\] where the subscript of the expectation indicates which distribution we integrate over. The term \(-\mathsf{E}_{f_t}\{\log f_t(\boldsymbol{X})\}\) is called the entropy of the distribution. The divergence is strictly positive unless \(g(\cdot; \boldsymbol{\psi}) \equiv f_t(\cdot).\) Note that, by construction, it is not symmetric.
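To make the definition concrete, the following minimal sketch evaluates the divergence between two univariate Gaussian densities by numerical integration and compares it with the closed-form expression for Gaussians. The function names `kl_gauss_numeric` and `kl_gauss_exact`, and the parameter values, are illustrative choices that do not appear in the text.

```r
# Kullback-Leibler divergence KL(f || g) between two univariate Gaussian
# densities, evaluated by numerical integration (illustrative helper)
kl_gauss_numeric <- function(mean_f, sd_f, mean_g, sd_g) {
  integrand <- function(x) {
    log_ratio <- dnorm(x, mean_f, sd_f, log = TRUE) -
      dnorm(x, mean_g, sd_g, log = TRUE)
    log_ratio * dnorm(x, mean_f, sd_f)
  }
  integrate(integrand, lower = -Inf, upper = Inf)$value
}
# Closed-form expression of the same divergence for Gaussian densities
kl_gauss_exact <- function(mean_f, sd_f, mean_g, sd_g) {
  log(sd_g / sd_f) + (sd_f^2 + (mean_f - mean_g)^2) / (2 * sd_g^2) - 0.5
}
# Swapping the two arguments changes the value: the divergence is asymmetric
kl_fg <- kl_gauss_numeric(0, 1, 1, 2)
kl_gf <- kl_gauss_numeric(1, 2, 0, 1)
c(kl_fg = kl_fg, kl_gf = kl_gf)
```

Both values are positive, but they differ, which illustrates the lack of symmetry noted in the definition.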

The Kullback–Leibler divergence is central to the study of model misspecification: if we fit \(g(\cdot)\) when data arise from \(f_t,\) the maximum likelihood estimator of the parameters \(\widehat{\boldsymbol{\psi}}\) will be the value of the parameter that minimizes the Kullback–Leibler divergence \(\mathsf{KL}(f_t \parallel g);\) this value will be positive unless the model is correctly specified and \(g(\cdot; \widehat{\boldsymbol{\psi}}) = f_t(\cdot).\) See Davison (2003), pp. 122–125 for a discussion.

In the Bayesian setting, interest lies in approximating \(f_t \equiv p(\boldsymbol{\theta} \mid \boldsymbol{y}).\) The problem of course is that we cannot compute expectations with respect to the posterior, since these require knowledge of the marginal likelihood, which acts as a normalizing constant, in most settings of interest. What we can do instead is to consider the model that minimizes the reverse Kullback–Leibler divergence \[\begin{align*} g(\boldsymbol{\theta}; \widehat{\boldsymbol{\psi}}) = \mathrm{argmin}_{\boldsymbol{\psi}} \mathsf{KL}\{g(\boldsymbol{\theta};\boldsymbol{\psi}) \parallel p(\boldsymbol{\theta} \mid \boldsymbol{y})\}. \end{align*}\] We will show soon that this is a sensible objective function.

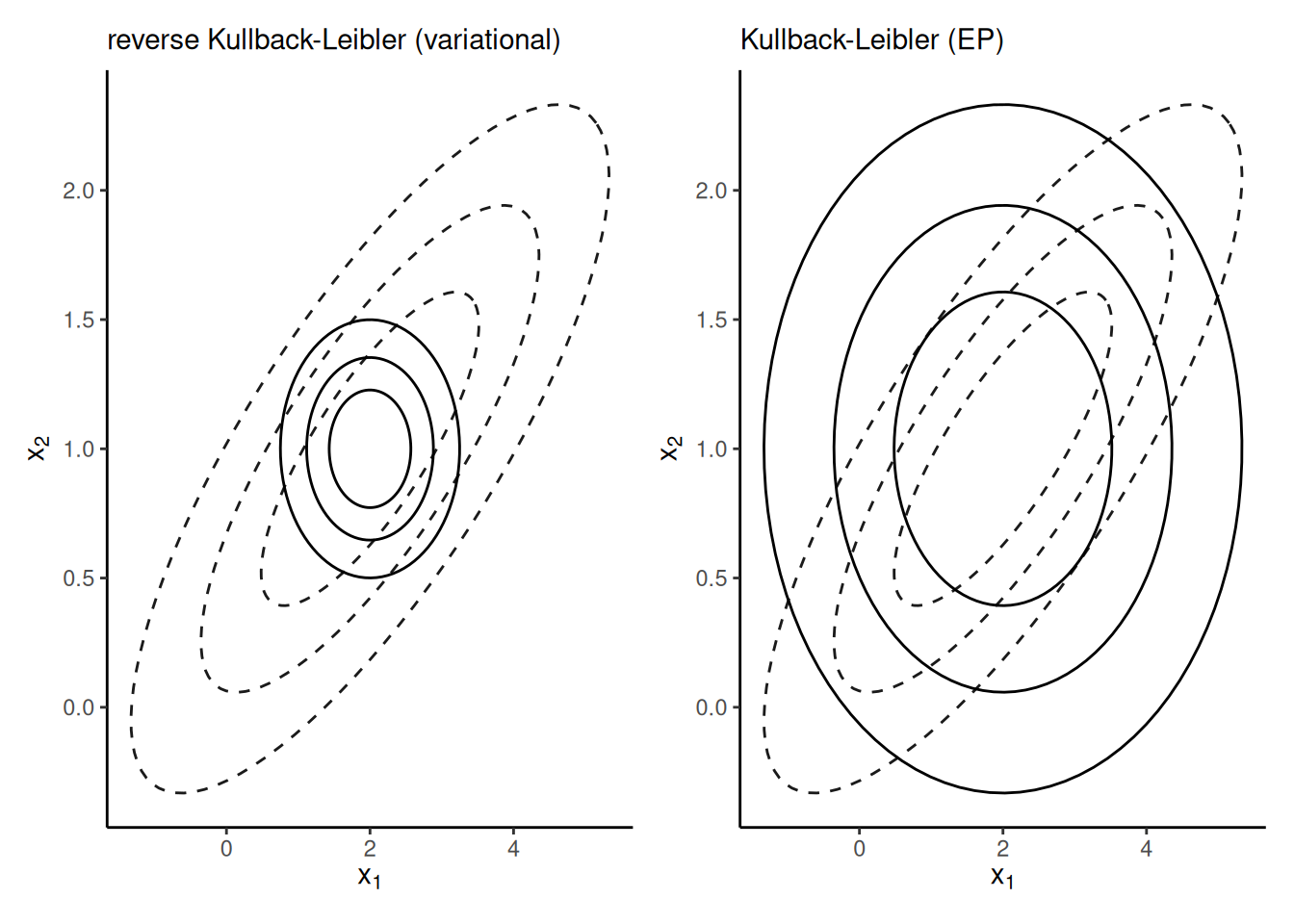

Remark 10.1 (Lack of symmetry of Kullback–Leibler divergence). Consider an approximation of a bivariate Gaussian vector with correlated components \(\boldsymbol{X} \sim \mathsf{Gauss}_2(\boldsymbol{\mu}, \boldsymbol{\Sigma})\). If we approximate each margin independently with a univariate Gaussian, then the density which minimizes the forward Kullback–Leibler divergence \[\mathsf{KL}\{ \phi_2(\boldsymbol{\mu}, \boldsymbol{\Sigma}) \parallel \phi(\mu_{1g}, \sigma^2_{1g})\phi(\mu_{2g}, \sigma^2_{2g})\}\] will have the same marginal means \(\widehat{\mu}_{ig}=\mu_i\) and variances \(\widehat{\sigma}^2_{ig} = \Sigma_{ii}\), whereas the best approximation that minimizes the reverse Kullback–Leibler divergence \[\mathsf{KL}\{ \phi(\mu_{1g}, \sigma^2_{1g})\phi(\mu_{2g}, \sigma^2_{2g}) \parallel \phi_2(\boldsymbol{\mu}, \boldsymbol{\Sigma})\}\] will have the same mean, but a variance equal to the conditional variance of one component given the other, e.g., \(\widehat{\sigma}^2_{1g} = \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1}\Sigma_{21}.\) Figure 10.1 shows the two approximations: the reverse Kullback–Leibler approximation is much too narrow and concentrates its mass where the joint density is high, whereas the approximation that minimizes the forward Kullback–Leibler divergence puts mass everywhere the target is supported.
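The statement of the remark can be checked numerically. The sketch below, under the assumption of a hypothetical covariance matrix `Sigma`, uses the closed-form Kullback–Leibler divergence between multivariate Gaussians and minimizes it over the variances of an independent approximation, in both directions; the helper `kl_mvgauss` is an illustrative name.

```r
# Kullback-Leibler divergence KL(N(mu0, S0) || N(mu1, S1)) between
# multivariate Gaussian distributions (closed-form expression)
kl_mvgauss <- function(mu0, S0, mu1, S1) {
  d <- length(mu0)
  S1_inv <- solve(S1)
  dev <- mu1 - mu0
  0.5 * (sum(diag(S1_inv %*% S0)) + c(t(dev) %*% S1_inv %*% dev) - d +
    c(determinant(S1)$modulus) - c(determinant(S0)$modulus))
}
mu <- c(0, 0)
Sigma <- matrix(c(2, 1.2, 1.2, 1), nrow = 2) # hypothetical covariance
# Optimize over the log variances of the independent margins, with the
# means held fixed at their true values
forward <- optim(
  par = c(0, 0),
  fn = function(lv) kl_mvgauss(mu, Sigma, mu, diag(exp(lv))),
  method = "BFGS"
)
reverse <- optim(
  par = c(0, 0),
  fn = function(lv) kl_mvgauss(mu, diag(exp(lv)), mu, Sigma),
  method = "BFGS"
)
var_forward <- exp(forward$par) # compare with diag(Sigma)
var_reverse <- exp(reverse$par) # compare with 1 / diag(solve(Sigma))
rbind(var_forward, var_reverse)
```

The reverse divergence returns the reciprocal of the diagonal of the precision matrix, which for a bivariate Gaussian is the conditional variance, and is therefore smaller than the marginal variance whenever the components are correlated.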

Before proceeding with the objective function of variational inference, we recall basic definitions on convexity, which underlie Jensen’s inequality.

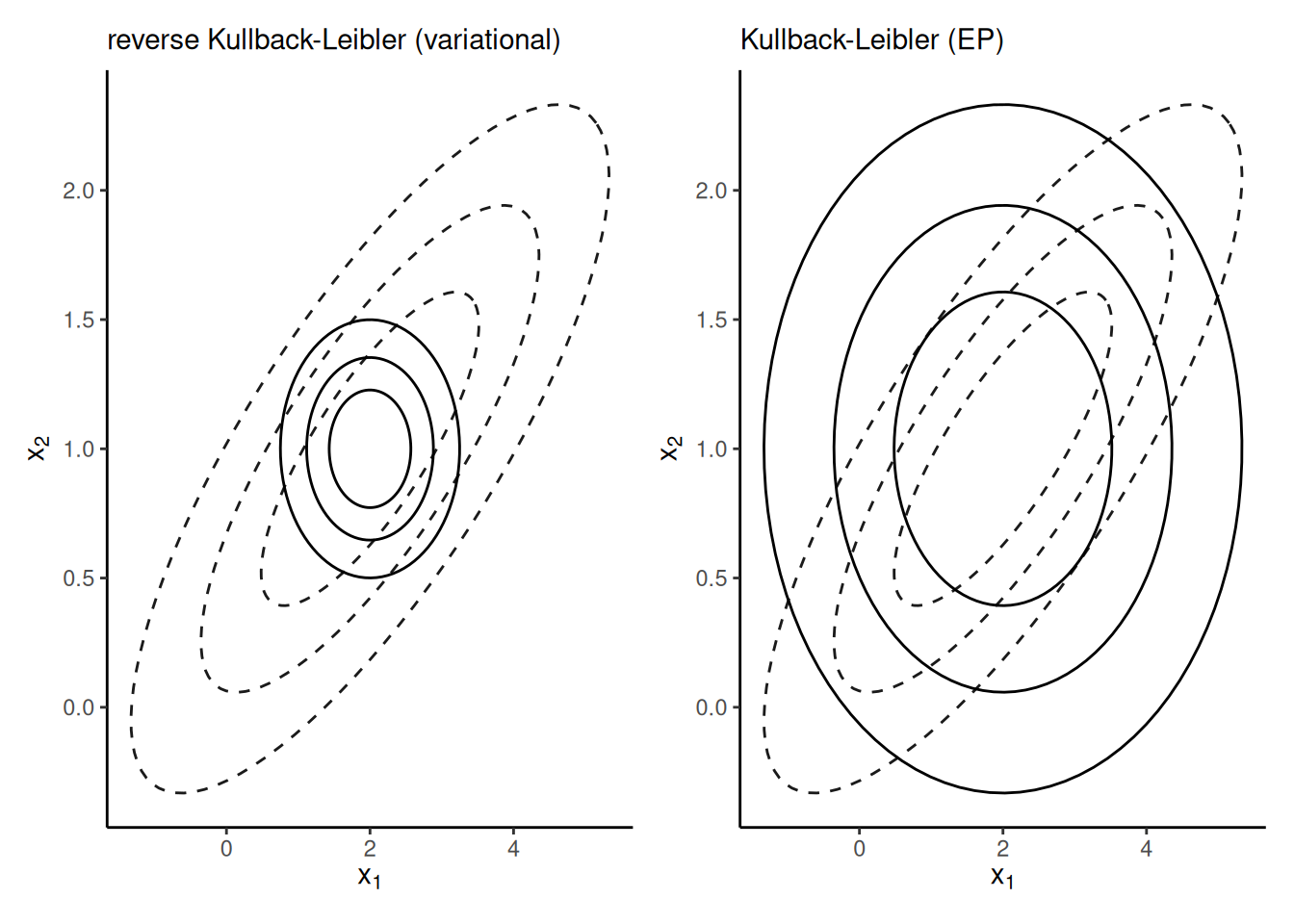

Definition 10.2 (Convex function) A real-valued function \(h: \mathbb{R} \to \mathbb{R}\) is convex if, for any \(x_1,x_2 \in \mathbb{R},\) every convex combination of \(x_1\) and \(x_2\) satisfies \[\begin{align*} h(tx_1 + (1-t)x_2) \leq t h(x_1) + (1-t) h(x_2), \qquad 0 \leq t \leq 1. \end{align*}\] The left panel of Figure 10.2 illustrates the fact that the chord between any two points lies above the function. Examples include the exponential function, or a quadratic \(ax^2+bx + c\) with \(a>0.\)

Consider now the problem of approximating the marginal likelihood, sometimes called the evidence, \[\begin{align*} p(\boldsymbol{y}) = \int_{\boldsymbol{\Theta}} p(\boldsymbol{y}, \boldsymbol{\theta}) \mathrm{d} \boldsymbol{\theta}, \end{align*}\] where the joint density \(p(\boldsymbol{y}, \boldsymbol{\theta})\) is the product of the likelihood and the prior. The marginal likelihood is typically intractable, or very expensive to compute, but it is necessary to calculate probabilities and various expectations with respect to the posterior unless we draw samples from it.

We can rewrite the marginal likelihood as \[\begin{align*} p(\boldsymbol{y}) = \int_{\boldsymbol{\Theta}} \frac{p(\boldsymbol{y}, \boldsymbol{\theta})}{g(\boldsymbol{\theta};\boldsymbol{\psi})} g(\boldsymbol{\theta};\boldsymbol{\psi}) \mathrm{d} \boldsymbol{\theta}. \end{align*}\] With convex functions, Jensen’s inequality implies that \(h\{\mathsf{E}(X)\} \leq \mathsf{E}\{h(X)\}.\) Applying this with \(h(x)=-\log(x)\) to the expectation, under \(g,\) of the ratio \(p(\boldsymbol{y}, \boldsymbol{\theta})/g(\boldsymbol{\theta};\boldsymbol{\psi}),\) we get \[\begin{align*} -\log p(\boldsymbol{y}) = -\log \left\{\int_{\boldsymbol{\Theta}} \frac{p(\boldsymbol{y}, \boldsymbol{\theta})}{g(\boldsymbol{\theta};\boldsymbol{\psi})} g(\boldsymbol{\theta};\boldsymbol{\psi}) \mathrm{d} \boldsymbol{\theta}\right\} \leq - \int_{\boldsymbol{\Theta}} \log \left(\frac{p(\boldsymbol{y}, \boldsymbol{\theta})}{g(\boldsymbol{\theta};\boldsymbol{\psi})}\right) g(\boldsymbol{\theta};\boldsymbol{\psi}) \mathrm{d} \boldsymbol{\theta}. \end{align*}\]

We can get a slightly different take if we consider the reformulation \[\begin{align*} \mathsf{KL}\{g(\boldsymbol{\theta};\boldsymbol{\psi}) \parallel p(\boldsymbol{\theta} \mid \boldsymbol{y})\} = \mathsf{E}_{g}\{\log g(\boldsymbol{\theta})\} - \mathsf{E}_g\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\} + \log p(\boldsymbol{y}). \end{align*}\] Since \(\log p(\boldsymbol{y})\) does not depend on \(\boldsymbol{\psi},\) instead of minimizing the Kullback–Leibler divergence, we can equivalently maximize the so-called evidence lower bound (ELBO) \[\begin{align*} \mathsf{ELBO}(g) = \mathsf{E}_g\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\} - \mathsf{E}_{g}\{\log g(\boldsymbol{\theta})\}. \end{align*}\] The ELBO as an objective function balances two terms: the first is the expected value of the log joint density under the approximating density \(g,\) which would be maximized by a distribution placing all mass at the maximum of \(p(\boldsymbol{y}, \boldsymbol{\theta}),\) whereas the second is the entropy of the approximating density, which rewards diffuse distributions. We thus try to maximize the expected log joint density, subject to a regularization term.

The ELBO is a lower bound for the log marginal likelihood because the Kullback–Leibler divergence is non-negative and \[\begin{align*} \log p(\boldsymbol{y}) = \mathsf{ELBO}(g) + \mathsf{KL}\{g(\boldsymbol{\theta};\boldsymbol{\psi}) \parallel p(\boldsymbol{\theta} \mid \boldsymbol{y})\}. \end{align*}\] If we could compute the marginal likelihood of a (typically simpler) competing model, and the lower bound on the evidence of the more complex model was much larger, then we could use this as evidence in favour of the latter; in general, however, there is no theoretical guarantee for model comparison based on two evidence lower bounds. The purpose of variational inference is that approximations to expectations, credible intervals, etc. are obtained from \(g(\cdot; \boldsymbol{\psi})\) instead of \(p(\cdot \mid \boldsymbol{y}).\)
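The decomposition can be verified numerically in a toy model where everything is available in closed form. The sketch below assumes, purely for illustration, observations \(Y_i \sim \mathsf{Gauss}(\theta, 1)\) with prior \(\theta \sim \mathsf{Gauss}(0,1)\) and a Gaussian approximation with mean `m` and standard deviation `s`; the data are simulated and all names are hypothetical.

```r
set.seed(2024)
n <- 20
y <- rnorm(n, mean = 1) # simulated data for the illustration
# Model: y_i ~ Gauss(theta, 1) with prior theta ~ Gauss(0, 1)
post_var <- 1 / (n + 1)
post_mean <- sum(y) * post_var
log_evidence <- -0.5 * n * log(2 * pi) - 0.5 * log(n + 1) -
  0.5 * (sum(y^2) - sum(y)^2 / (n + 1))
# ELBO for a Gaussian approximation g with mean m and standard deviation s
elbo <- function(m, s) {
  expected_log_joint <- -0.5 * (n + 1) * log(2 * pi) -
    0.5 * (sum((y - m)^2) + n * s^2) - 0.5 * (m^2 + s^2)
  entropy <- 0.5 * (1 + log(2 * pi * s^2))
  expected_log_joint + entropy
}
# Reverse Kullback-Leibler divergence KL(g || posterior), both Gaussian
kl_reverse <- function(m, s) {
  log(sqrt(post_var) / s) + (s^2 + (m - post_mean)^2) / (2 * post_var) - 0.5
}
m <- 0.5
s <- 0.4
c(elbo = elbo(m, s), kl = kl_reverse(m, s), log_evidence = log_evidence)
```

For any choice of `m` and `s`, the first two entries sum to the third, and the bound is attained when the approximation coincides with the posterior.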

Example 10.1 (Variational inference vs Laplace approximation) The Laplace approximation differs from the Gaussian variational approximation. The right panel of Figure 10.2 shows a skew-Gaussian distribution with location zero, unit scale and a skewness parameter of \(\alpha=10;\) its density is \(2\phi(x)\Phi(\alpha x).\)

The Laplace approximation is easily obtained by numerical maximization; the mode is the mean of the resulting approximation, with a standard deviation equal to the square root of the reciprocal of the Hessian of the negative log density, evaluated at the mode.

Consider an approximation with \(g\) the density of \(\mathsf{Gauss}(m, s^2);\) we obtain the parameters by maximizing the ELBO. The entropy term for a Gaussian approximating density is \[\begin{align*} -\mathsf{E}_g(\log g) = \frac{1}{2}\log(2\pi s^2) + \frac{1}{2s^2}\mathsf{E}_g\left\{(X-m)^2 \right\} = \frac{1}{2} \left\{1+\log(2\pi s^2)\right\} \end{align*}\] given \(\mathsf{E}_g\{(X-m)^2\}=s^2\) by definition of the variance. Since the target has location zero and unit scale, its log density is \(-x^2/2 + \log \Phi(\alpha x)\) up to an additive constant. Ignoring constant terms that do not depend on the parameters of \(g,\) optimization of the ELBO amounts to solving \[\begin{align*} &\mathrm{argmax}_{m, s^2} \left[-\frac{1}{2} \mathsf{E}_g \left(X^2\right) + \mathsf{E}_g\left\{\log \Phi(\alpha X)\right\} + \frac{1}{2}\log(s^2) \right] \\&\quad =\mathrm{argmax}_{m, s^2} \left[ -\frac{s^2 + m^2}{2} + \frac{1}{2}\log(s^2) + \mathsf{E}_{Z}\left[\log \Phi\{\alpha (sZ+m)\}\right] \right] \end{align*}\] where \(Z \sim \mathsf{Gauss}(0,1).\) We can approximate the last term by Monte Carlo with a single sample of draws of \(Z\) (recycled at every iteration) and use this to find the optimal parameters. The right panel of Figure 10.2 shows that the resulting approximation aligns with the bulk. It of course fails to capture the asymmetry, since the approximating density is symmetric.
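The two approximations of this example can be sketched as follows. This is a minimal illustration rather than the code used to produce the figure: the starting values, the use of `optim`, and the replacement of random draws by a fixed grid of standard Gaussian quantiles are assumptions of the sketch.

```r
alpha <- 10
# Log density of the skew-Gaussian with location zero and unit scale
log_target <- function(x) {
  log(2) + dnorm(x, log = TRUE) + pnorm(alpha * x, log.p = TRUE)
}
# Laplace approximation: mode and curvature of the negative log density
laplace <- optim(
  par = 0.5,
  fn = function(x) -log_target(x),
  method = "BFGS",
  hessian = TRUE
)
laplace_mean <- laplace$par
laplace_sd <- sqrt(1 / laplace$hessian[1, 1])
# Gaussian variational approximation: a fixed set of standard Gaussian
# quantiles (assumption of this sketch) is recycled at every evaluation
z <- qnorm(ppoints(1000))
neg_elbo <- function(par) {
  m <- par[1]
  s <- exp(par[2]) # optimize over log(s) to keep the scale positive
  x <- m + s * z
  # Expected log target plus Gaussian entropy, up to additive constants
  -(mean(-0.5 * x^2 + pnorm(alpha * x, log.p = TRUE)) + log(s))
}
vi <- optim(par = c(0.5, log(0.5)), fn = neg_elbo)
vi_mean <- vi$par[1]
vi_sd <- exp(vi$par[2])
c(laplace_mean = laplace_mean, vi_mean = vi_mean)
```

The Laplace approximation is centered at the mode, close to the origin, whereas the variational approximation shifts its mean towards the bulk of the distribution.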

10.2 Coordinate-ascent variational inference

Variational inference in itself does not determine the choice of approximating density \(g(\cdot; \boldsymbol{\psi});\) the quality of the approximation depends strongly on the latter. The user has ample choice to decide whether to use the fully correlated, factorized, or the mean-field approximation, along with the parametric family for each block. Note that the latter must be chosen according to the support of the parameters: the reverse Kullback–Leibler divergence, which we work with, is infinite if \(g\) places mass outside the support of \(p(\boldsymbol{\theta} \mid \boldsymbol{y}),\) whereas the forward divergence is infinite if the support of \(g\) does not include that of the posterior.

There are two main approaches: the first is to start off with the model and a factorization of the density, and deduce the form of the most suitable parametric family for the approximation that will maximize the ELBO. This requires bespoke derivation of the form of the density and the conditionals for each model, and does not lend itself easily to generalizations. The second approach is to rely on a generic family for the approximation, and an omnibus procedure for the optimization using a reformulation via stochastic optimization that approximates the integrals appearing in the ELBO formula.

Proposition 10.1 (Factorization) We consider factorizations of \(g(\cdot;\boldsymbol{\psi})\) into \(M\) blocks with parameters \(\boldsymbol{\psi}_1, \ldots, \boldsymbol{\psi}_M,\) where \[\begin{align*} g(\boldsymbol{\theta}; \boldsymbol{\psi}) = \prod_{j=1}^M g_j(\boldsymbol{\theta}_j; \boldsymbol{\psi}_j). \end{align*}\] If we assume that the \(J\) parameters \(\theta_1, \ldots, \theta_J\) are all mutually independent, so that each block consists of a single parameter, then we obtain a mean-field approximation. The latter will be poor if parameters are strongly correlated, as we will demonstrate later.

We use a factorization of \(g,\) and denote the components \(g_j(\cdot)\) for simplicity, omitting dependence on the parameters \(\boldsymbol{\psi}.\) We can write the ELBO as \[\begin{align*} \mathsf{ELBO}(g) &= \int \log p(\boldsymbol{y}, \boldsymbol{\theta}) \prod_{j=1}^M g_j(\boldsymbol{\theta}_j)\mathrm{d} \boldsymbol{\theta} - \sum_{j=1}^M \int \log \{ g_j(\boldsymbol{\theta}_j) \} g_j(\boldsymbol{\theta}_j) \mathrm{d} \boldsymbol{\theta}_j \\& = \idotsint \left\{\log p(\boldsymbol{y}, \boldsymbol{\theta}) \prod_{\substack{i \neq j \\j=1}}^M g_j(\boldsymbol{\theta}_j)\mathrm{d} \boldsymbol{\theta}_{-i}\right\} g_i(\boldsymbol{\theta}_i) \mathrm{d} \boldsymbol{\theta}_i \\& \quad - \sum_{j=1}^M \int \log \{ g_j(\boldsymbol{\theta}_j) \} g_j(\boldsymbol{\theta}_j) \mathrm{d} \boldsymbol{\theta}_j \\& \stackrel{\boldsymbol{\theta}_i}{\propto} \int \mathsf{E}_{-i}\left\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\right\} g_i(\boldsymbol{\theta}_i) \mathrm{d} \boldsymbol{\theta}_i - \int \log \{ g_i(\boldsymbol{\theta}_i) \} g_i(\boldsymbol{\theta}_i) \mathrm{d} \boldsymbol{\theta}_i \end{align*}\] where the last line is, up to an additive constant, the negative Kullback–Leibler divergence between \(g_i\) and the density proportional to \(\exp[\mathsf{E}_{-i}\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\}],\) with \[\begin{align*} \mathsf{E}_{-i}\left\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\right\} = \int \log p(\boldsymbol{y}, \boldsymbol{\theta}) \prod_{\substack{i \neq j \\j=1}}^M g_j(\boldsymbol{\theta}_j)\mathrm{d} \boldsymbol{\theta}_{-i} \end{align*}\] and the subscript \(-i\) indicates that we consider all but the \(i\)th of the \(M\) blocks.

In some cases, maximization of the ELBO is implicit. Indeed, since the Kullback–Leibler divergence is non-negative and minimized if we take the same density, the approximating density \(g_i\) that maximizes the ELBO is the one for which the two densities are equal, so it is of the form \[\begin{align*} g^{\star}_i(\boldsymbol{\theta}_i) \propto \exp \left[ \mathsf{E}_{-i}\left\{\log p(\boldsymbol{y}, \boldsymbol{\theta})\right\}\right]. \end{align*}\] This expression is specified up to proportionality, but we can often look at the kernel of \(g^{\star}_i\) and deduce from it the normalizing constant, which is defined as the integral of the above. The posterior approximation will have a closed-form expression if we consider cases of conditionally conjugate distributions in the exponential family: we can see that the optimal \(g^{\star}_i\) relates to the conditional since \(p(\boldsymbol{\theta}, \boldsymbol{y}) \stackrel{\boldsymbol{\theta}_i}{\propto} p(\boldsymbol{\theta}_i \mid \boldsymbol{\theta}_{-i}, \boldsymbol{y}).\) The connection to Gibbs sampling, which instead draws parameters from the conditional, reveals that problems that can be tackled by the latter method will be amenable to factorizations with approximating densities from known families.
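As a worked illustration of this formula, which ties back to Remark 10.1, suppose (an assumption made only for this sketch) that the posterior is bivariate Gaussian with mean \(\boldsymbol{\mu}\) and precision matrix \(\mathbf{Q} = \boldsymbol{\Sigma}^{-1},\) and consider the mean-field factorization \(g_1(\theta_1)g_2(\theta_2).\) Keeping only the terms that involve \(\theta_1,\) we get \[\begin{align*} \log g^{\star}_1(\theta_1) &= \mathsf{E}_{-1}\left\{-\frac{1}{2}(\boldsymbol{\theta}-\boldsymbol{\mu})^\top\mathbf{Q}(\boldsymbol{\theta}-\boldsymbol{\mu})\right\} + \text{constant} \\ &= -\frac{Q_{11}}{2}(\theta_1-\mu_1)^2 - Q_{12}(\theta_1-\mu_1)\left\{\mathsf{E}_{g_2}(\theta_2)-\mu_2\right\} + \text{constant}, \end{align*}\] which is quadratic in \(\theta_1.\) The optimal factor is thus Gaussian with mean \(\mu_1 - Q_{12}\{\mathsf{E}_{g_2}(\theta_2)-\mu_2\}/Q_{11}\) and variance \(Q_{11}^{-1} = \Sigma_{11} - \Sigma_{12}^2/\Sigma_{22}.\) By symmetry, the same holds for \(g^{\star}_2\) with indices swapped, and the updates are at a fixed point when the means equal \(\mu_1\) and \(\mu_2\): the variance of each factor is the conditional variance, as stated in the remark.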

If we consider maximization of the ELBO for \(g_i,\) we can see from the law of iterated expectation that the latter is proportional to \[\begin{align*} \mathsf{ELBO}(g_i) \propto \mathsf{E}_i \left[ \mathsf{E}_{-i} \{\log p(\boldsymbol{\theta}, \boldsymbol{y}) \}\right] - \mathsf{E}_i\{\log g_i(\boldsymbol{\theta}_i)\}. \end{align*}\] Due to the nature of this conditional expectation, we can devise an algorithm to maximize the ELBO of the factorized approximation. Each parameter update depends on the other components, but \(\mathsf{ELBO}(g_i)\) is concave. We can update \(g^{\star}_i\) in turn for each \(i=1, \ldots, M,\) treating the other factors as fixed, and iterate this scheme. The resulting algorithm, termed coordinate-ascent variational inference (CAVI), is a valid coordinate ascent scheme and is guaranteed to monotonically increase the evidence lower bound until convergence to a local maximum; see Sections 3.1.5 and 3.2.4–3.2.5 of Boyd and Vandenberghe (2004). At each cycle, we compute the ELBO and stop the algorithm when the change is lower than some preset numerical tolerance. Since the ELBO may have multiple local optima, we can perform random initializations and keep the run with the highest ELBO value.

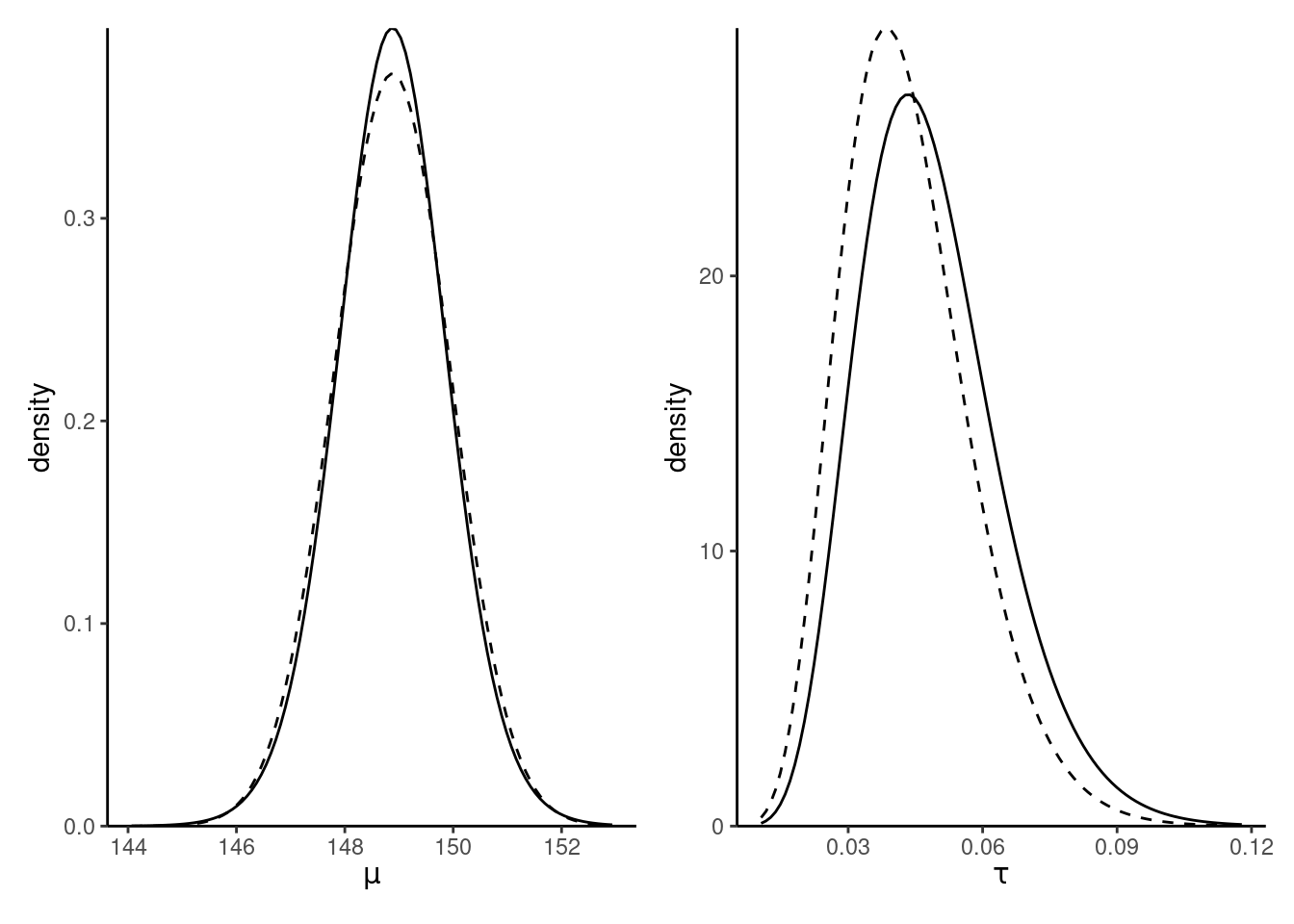

Example 10.2 (Variational approximation to Gaussian conjugate model) We consider the example of Section 10.1.3 of Bishop (2006) for a variational approximation of a Gaussian distribution with conjugate priors parametrized in terms of precision, with \[\begin{align*} Y_i &\sim \mathsf{Gauss}(\mu, \tau^{-1}), \qquad i =1, \ldots, n;\\ \mu &\sim \mathsf{Gauss}\{\mu_0, (\tau\tau_0)^{-1}\} \\ \tau &\sim \mathsf{gamma}(a_0, b_0). \end{align*}\] This is an example where the marginal posteriors are available in closed form, \[\begin{align*} \tau \mid \boldsymbol{y} &\sim \mathsf{gamma}\left\{ a_0 + \frac{n}{2}, b_0 + \frac{(n-1)s^2}{2} + \frac{\tau_0 n(\overline{y}-\mu_0)^2}{2(\tau_0+n)}\right\} \\ \mu \mid \boldsymbol{y} &\sim \mathsf{Student}\left\{ \frac{n\overline{y}+\tau_0\mu_0}{n+\tau_0}, \frac{2b_0 +(n-1)s^2 + \tau_0n(\overline{y}-\mu_0)^2/(\tau_0+n)}{(n+2a_0)(\tau_0+n)}, 2a_0+n\right\} \end{align*}\] where \(\overline{y}\) and \(s^2\) denote the sample mean and the unbiased sample variance, so we can compare our approximation with the truth. We assume a factorization of the variational approximation \(g_\mu(\mu)g_\tau(\tau);\) the factor for \(g_\mu\) satisfies \[\begin{align*} \log g^{\star}_{\mu}(\mu) \propto -\frac{\mathsf{E}_{\tau}(\tau)}{2} \left\{ \sum_{i=1}^n (y_i-\mu)^2 + \tau_0(\mu-\mu_0)^2\right\}, \end{align*}\] which is quadratic in \(\mu\) and thus must be Gaussian with precision \(\tau_n = \mathsf{E}_{\tau}(\tau)(n + \tau_0)\) and mean \((n + \tau_0)^{-1}\{\tau_0\mu_0 + n\overline{y}\}\) using Proposition 8.1, where \(n\overline{y} = \sum_{i=1}^n y_i.\) We could also note that this corresponds (up to expectation) to \(p(\mu \mid \tau, \boldsymbol{y}).\) As the sample size increases, the approximation converges to a Dirac delta (point mass) at the sample mean.

The optimal precision factor satisfies \[\begin{align*} \log g^{\star}_{\tau}(\tau) &\propto \log \tau\left(\frac{n+1}{2} + a_0-1\right) \\&\quad- \tau \left[b_0 + \frac{\mathsf{E}_{\mu}\left\{\sum_{i=1}^n (y_i-\mu)^2\right\}}{2} + \frac{\tau_0\mathsf{E}_{\mu}\left\{(\mu-\mu_0)^2\right\}}{2}\right]. \end{align*}\] This is of the same form as \(p(\tau \mid \mu, \boldsymbol{y}),\) namely a gamma with shape \(a_n =(n+1)/2 + a_0\) and rate \(b_n\) given by the term in the square brackets. It is helpful to rewrite the expected value as \[\begin{align*} \mathsf{E}_{\mu}\left\{\sum_{i=1}^n (y_i-\mu)^2\right\} = \sum_{i=1}^n \{y_i - \mathsf{E}_{\mu}(\mu)\}^2 + n \mathsf{Var}_{\mu}(\mu), \end{align*}\] so that it depends on the parameters of the distribution of \(\mu\) directly. We can then apply the coordinate ascent algorithm. Derivation of the ELBO, even in this toy setting, is tedious: \[\begin{align*} \mathsf{ELBO}(g) & = a_0\log(b_0)-\log \Gamma(a_0) +(a_0-1)\mathsf{E}_{\tau}(\log \tau)- b_0\mathsf{E}_{\tau}(\tau)\\ & \quad - \frac{n+1}{2} \log(2\pi) + \frac{\log(\tau_0) + (n+1)\mathsf{E}_{\tau}(\log \tau)}{2} \\&\quad - \frac{\mathsf{E}_{\tau}(\tau)\tau_0}{2} \left[\{\mu_0 - \mathsf{E}_{\mu}(\mu)\}^2 + \mathsf{Var}_{\mu}(\mu)\right] \\&\quad - \frac{\mathsf{E}_{\tau}(\tau)}{2} \left[ \sum_{i=1}^n \left\{y_i - \mathsf{E}_{\mu}(\mu)\right\}^2 + n \mathsf{Var}_{\mu}(\mu)\right] \\ &\quad - a_n \log (b_n) + \log \Gamma(a_n) - (a_n-1) \mathsf{E}_{\tau}(\log \tau) + b_n \mathsf{E}_{\tau}(\tau) \\& \quad + \frac{1 + \log\{2\pi\mathsf{Var}_{\mu}(\mu)\}}{2} \\&= a_0\log(b_0)-\log \Gamma(a_0) -a_n\log b_n + \log \Gamma(a_n) \\& \quad - \frac{n}{2}\log(2\pi)+ \frac{1 + \log (\tau_0/\tau_n)}{2}. \end{align*}\] The expected value of the precision is \(\mathsf{E}_{\tau}(\tau) = a_n/b_n,\) and the mean and variance of the Gaussian are given by its parameters. The terms involving \(\mathsf{E}_{\tau}(\log \tau) = \psi(a_n)-\log b_n\) cancel out.
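The updates of this example translate into a short coordinate ascent loop. The sketch below is one possible implementation under stated assumptions: the function name `cavi_gauss`, the initial value for \(\mathsf{E}_{\tau}(\tau),\) the simulated data and the hyperparameter values are all hypothetical, and the ELBO uses the simplified expression, which holds once the gamma factor has been updated given the current Gaussian factor.

```r
cavi_gauss <- function(y, mu0 = 0, tau0 = 1, a0 = 1, b0 = 1,
                       maxiter = 100L, tol = 1e-8) {
  n <- length(y)
  # Quantities that do not change across iterations
  mu_n <- (tau0 * mu0 + sum(y)) / (n + tau0)
  a_n <- a0 + (n + 1) / 2
  E_tau <- a0 / b0 # initial value: prior mean of the precision
  elbo <- numeric(maxiter)
  for (b in seq_len(maxiter)) {
    # Update the Gaussian factor for mu
    tau_n <- E_tau * (n + tau0)
    var_mu <- 1 / tau_n
    # Update the gamma factor for tau
    b_n <- b0 + 0.5 * (sum((y - mu_n)^2) + n * var_mu) +
      0.5 * tau0 * ((mu_n - mu0)^2 + var_mu)
    E_tau <- a_n / b_n
    # Simplified ELBO, evaluated after a full cycle of updates
    elbo[b] <- a0 * log(b0) - lgamma(a0) - a_n * log(b_n) + lgamma(a_n) -
      0.5 * n * log(2 * pi) + 0.5 * (1 + log(tau0 / tau_n))
    if (b > 1 && abs(elbo[b] - elbo[b - 1]) < tol) {
      break
    }
  }
  list(mu_n = mu_n, tau_n = tau_n, a_n = a_n, b_n = b_n,
       elbo = elbo[seq_len(b)])
}
set.seed(80601)
y <- rnorm(20, mean = 150, sd = 20) # simulated data for the illustration
fit <- cavi_gauss(y, mu0 = 150, tau0 = 0.1, a0 = 0.01, b0 = 0.01)
fit$elbo
```

Since the mean of the Gaussian factor and the shape of the gamma factor are fixed, only \(\tau_n\) and \(b_n\) change between cycles, and the algorithm stabilizes after a handful of iterations.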

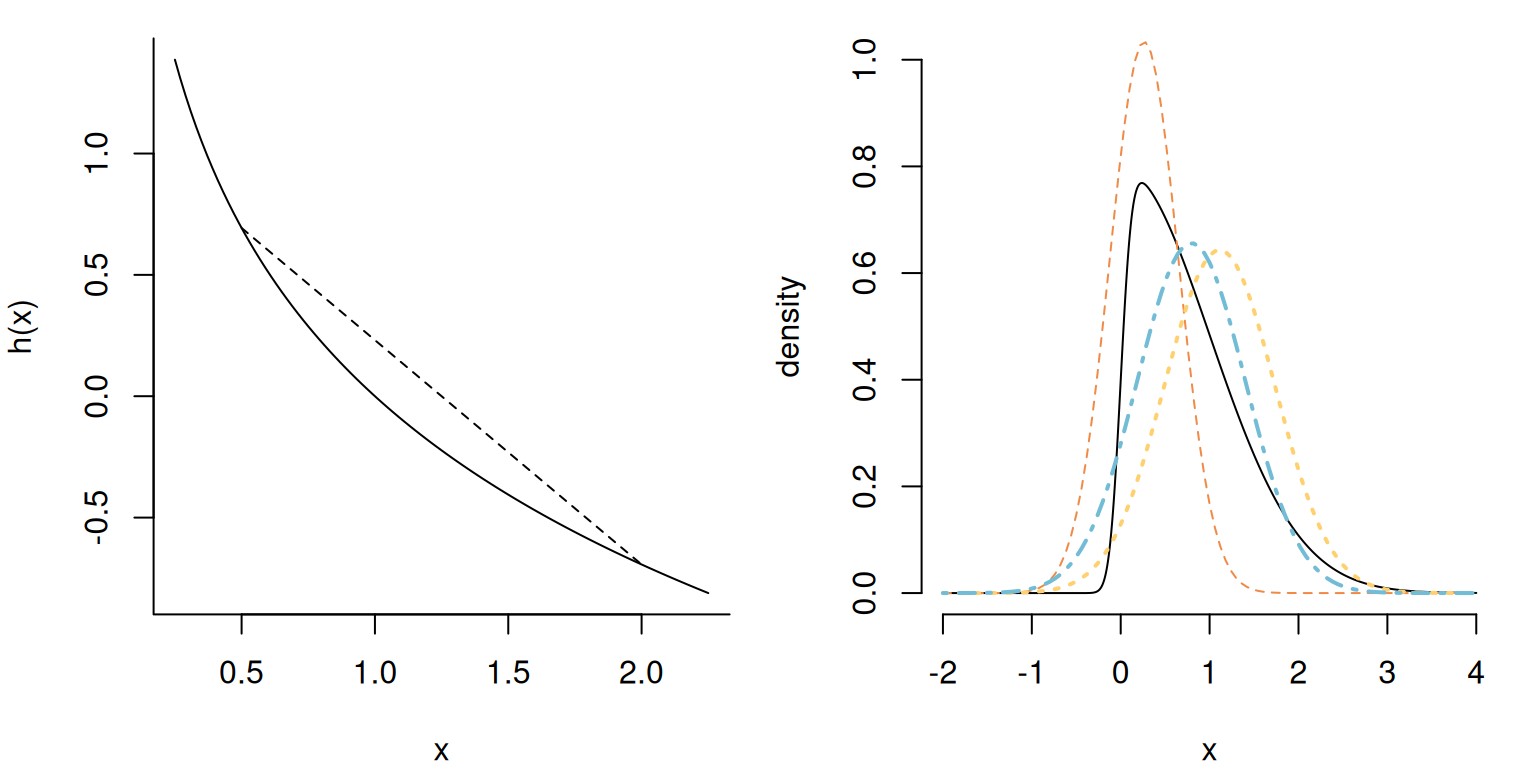

Figure 10.3 shows the contour plots of the true posterior (since the prior is conjugate) and the coordinate-ascent variational mean-field approximation. We can see that both are centered around the same values, but the independence assumption does not reflect the dependence and the CAVI approximation is slightly less dispersed.

Remark 10.2 (Approximation of latent variables). While we have focused on exposition with only parameters \(\boldsymbol{\theta},\) this can be generalized by including latent variables \(\boldsymbol{U}\) as in Section 6.1 in addition to the model parameters \(\boldsymbol{\theta}\) as part of the variational modelling.

Example 10.3 (CAVI for probit regression) A probit regression is a generalized linear model with probability of success \(\Phi(\mathbf{x}_i\boldsymbol{\beta}),\) where \(\Phi(\cdot)\) is the cumulative distribution function of a standard Gaussian variable. We assign a Gaussian prior to \(\boldsymbol{\beta}\) and write the likelihood as \[\begin{align*} p(\boldsymbol{y} \mid \boldsymbol{\beta}) = \Phi(\mathbf{X}\boldsymbol{\beta})^{\boldsymbol{y}}\Phi(-\mathbf{X}\boldsymbol{\beta})^{\boldsymbol{1}_n -\boldsymbol{y}} \end{align*}\] since \(1-\Phi(x) = \Phi(-x).\) Consider the data augmentation of Example 6.2, with auxiliary variables \(Z_i \mid \boldsymbol{\beta}\sim \mathsf{Gauss}(\mathbf{x}_i\boldsymbol{\beta}, 1).\)

With \(\boldsymbol{\beta} \sim \mathsf{Gauss}_p(\boldsymbol{\mu}_0, \mathbf{Q}_0^{-1}),\) the model admits conditionals \[\begin{align*} \boldsymbol{\beta} \mid \boldsymbol{z} &\sim \mathsf{Gauss}_p\left\{(\mathbf{X}^\top\mathbf{X} + \mathbf{Q}_0)^{-1}(\mathbf{X}^\top\boldsymbol{z} + \mathbf{Q}_0\boldsymbol{\mu}_0), (\mathbf{X}^\top\mathbf{X} + \mathbf{Q}_0)^{-1} \right\} \\ Z_i \mid y_i, \boldsymbol{\beta} &\sim \mathsf{trunc. Gauss}(\mathbf{x}_i\boldsymbol{\beta}, 1, l_i, u_i) \end{align*}\] where \((l_i, u_i)\) is \((-\infty,0)\) if \(y_i=0\) and \((0, \infty)\) if \(y_i=1.\) If we consider a factorization of the form \(g_{\boldsymbol{Z}}(\boldsymbol{z})g_{\boldsymbol{\beta}}(\boldsymbol{\beta}),\) then we can exploit the conditionals in the same way as for Gibbs sampling, but substituting unknown parameter functionals by their expectations. Furthermore, the optimal form of the density further factorizes as \(g_{\boldsymbol{Z}}(\boldsymbol{z}) = \prod_{i=1}^n g_{Z_i}(z_i).\)

The approximation depends on the mean parameter of \(\boldsymbol{Z}\), say \(\boldsymbol{\mu}_{\boldsymbol{Z}}\), and that of \(\boldsymbol{\beta},\) say \(\boldsymbol{\mu}_{\boldsymbol{\beta}}.\) To see this, consider the terms in the posterior that involve \(z_i\), where \[\begin{align*} p(z_i \mid \boldsymbol{\beta}, y_i) \propto \exp\left(-\frac{z_i^2 - 2z_i \mathbf{x}_i\boldsymbol{\beta}}{2}\right) \times \mathrm{I}(z_i >0)^{y_i}\mathrm{I}(z_i <0)^{1-y_i} \end{align*}\] whose logarithm is linear in \(\boldsymbol{\beta}\). The expectation of a univariate truncated Gaussian \(Z \sim \mathsf{trunc. Gauss}(\mu,\sigma^2, l, u)\) is \[\begin{align*} \mathsf{E}(Z) = \mu - \sigma\frac{\phi\{(u-\mu)/\sigma\} - \phi\{(l-\mu)/\sigma\}}{\Phi\{(u-\mu)/\sigma\} - \Phi\{(l-\mu)/\sigma\}}. \end{align*}\] If we replace \(\mu=\mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}}\) in this expression, we get the update \[\begin{align*} \mu_{Z_i} = \begin{cases} \mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}} - \frac{ \phi(\mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}})}{1-\Phi(\mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}})} & y_i = 0;\\ \mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}} + \frac{ \phi(\mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}})}{\Phi(\mathbf{x}_i\boldsymbol{\mu}_{\boldsymbol{\beta}})} & y_i = 1, \end{cases} \end{align*}\] since \(\phi(x)=\phi(-x).\)
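The expectation formula for the truncated Gaussian and the two special cases used in the update can be checked numerically. The helper names `trunc_gauss_mean` and `trunc_gauss_mean_numeric` and the value of the linear predictor `lp` below are hypothetical.

```r
# Mean of a Gaussian with location mu and scale sigma truncated to (l, u)
trunc_gauss_mean <- function(mu, sigma, l, u) {
  a <- (l - mu) / sigma
  b <- (u - mu) / sigma
  mu - sigma * (dnorm(b) - dnorm(a)) / (pnorm(b) - pnorm(a))
}
# Numerical check: integrate x times the truncated density over (l, u)
trunc_gauss_mean_numeric <- function(mu, sigma, l, u) {
  norm_const <- pnorm(u, mu, sigma) - pnorm(l, mu, sigma)
  integrate(function(x) x * dnorm(x, mu, sigma) / norm_const, l, u)$value
}
lp <- 0.3 # hypothetical value of the linear predictor
# Updates for a success (y = 1) and for a failure (y = 0)
mu_z_success <- trunc_gauss_mean(lp, 1, 0, Inf)
mu_z_failure <- trunc_gauss_mean(lp, 1, -Inf, 0)
c(success = mu_z_success, failure = mu_z_failure)
```

The mean of the latent variable is pushed above the linear predictor for successes and below it for failures.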

The optimal form for \(\boldsymbol{\beta}\) is Gaussian and the only unknown parameter is \(\boldsymbol{\mu}_{\boldsymbol{\beta}};\) the log density only involves a linear form for \(\boldsymbol{Z}\), so the update follows from the same principle. Regrouping the means of the latent variables into a vector \(\boldsymbol{\mu}_{\boldsymbol{Z}},\) we get \[\begin{align*} \boldsymbol{\mu}_{\boldsymbol{\beta}} = (\mathbf{X}^\top\mathbf{X} + \mathbf{Q}_0)^{-1}(\mathbf{X}^\top\boldsymbol{\mu}_{\boldsymbol{Z}} + \mathbf{Q}_0\boldsymbol{\mu}_0). \end{align*}\]

Starting with an initial vector for either parameter, the CAVI algorithm alternates between updates of \(\boldsymbol{\mu}_{\boldsymbol{\beta}}\) and \(\boldsymbol{\mu}_{\boldsymbol{Z}}\) until the value of the evidence lower bound stabilizes.

```r
# Data augmentation: "z" is latent Gaussian,
# truncated to be negative for failures
# and positive for successes
cavi_probit <- function(
  y, # response vector (0/1)
  X, # model matrix
  prior_beta_prec = diag(rep(0.01, ncol(X))),
  prior_beta_mean = rep(0, ncol(X)),
  maxiter = 1000L,
  tol = 1e-4
) {
  # Precompute fixed quantities
  sc <- solve(crossprod(X) + prior_beta_prec)
  pmu_prior <- prior_beta_prec %*% prior_beta_mean
  n <- length(y) # number of observations
  stopifnot(nrow(X) == n)
  mu_z <- rep(0, n)
  y <- as.logical(y)
  ELBO <- numeric(maxiter)
  # Constant term of the ELBO (does not affect convergence)
  lcst <- -0.5 *
    log(det(solve(prior_beta_prec) %*% crossprod(X) + diag(ncol(X))))
  for (b in seq_len(maxiter)) {
    # Update the mean of beta, then the means of the latent variables
    mu_b <- c(sc %*% (t(X) %*% mu_z + pmu_prior))
    lp <- c(X %*% mu_b)
    mu_z <- lp + dnorm(lp) / (pnorm(lp) - ifelse(y, 0, 1))
    # "lower.tail" is not vectorized in pnorm, so flip the sign of the
    # linear predictor for failures instead
    ELBO[b] <- sum(pnorm(ifelse(y, lp, -lp), log.p = TRUE)) +
      lcst - 0.5 *
      c(t(mu_b - prior_beta_mean) %*%
        prior_beta_prec %*%
        (mu_b - prior_beta_mean))
    if (b > 2 && (ELBO[b] - ELBO[b - 1]) < tol) {
      break
    }
  }
  list(mu_z = mu_z, mu_beta = mu_b, elbo = ELBO[seq_len(b)])
}
```

We consider for illustration purposes data from Experiment 2 of Duke and Amir (2023) on the effect of sequential decisions and purchasing formats. We fit a model with the age of the participant and the binary variable format, which indicates the experimental condition. The model is fitted with a sum-to-zero constraint for the dummy variable.

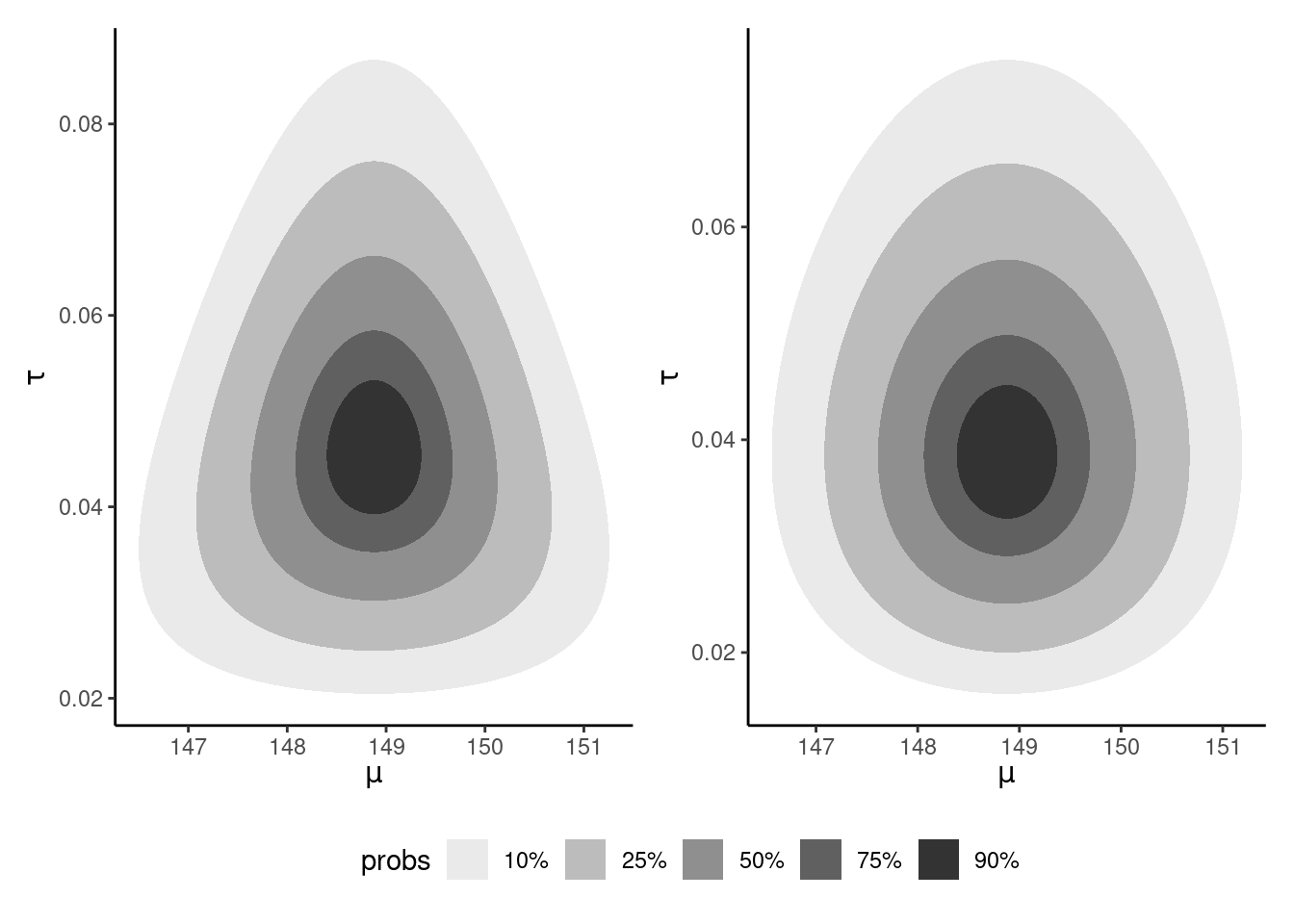

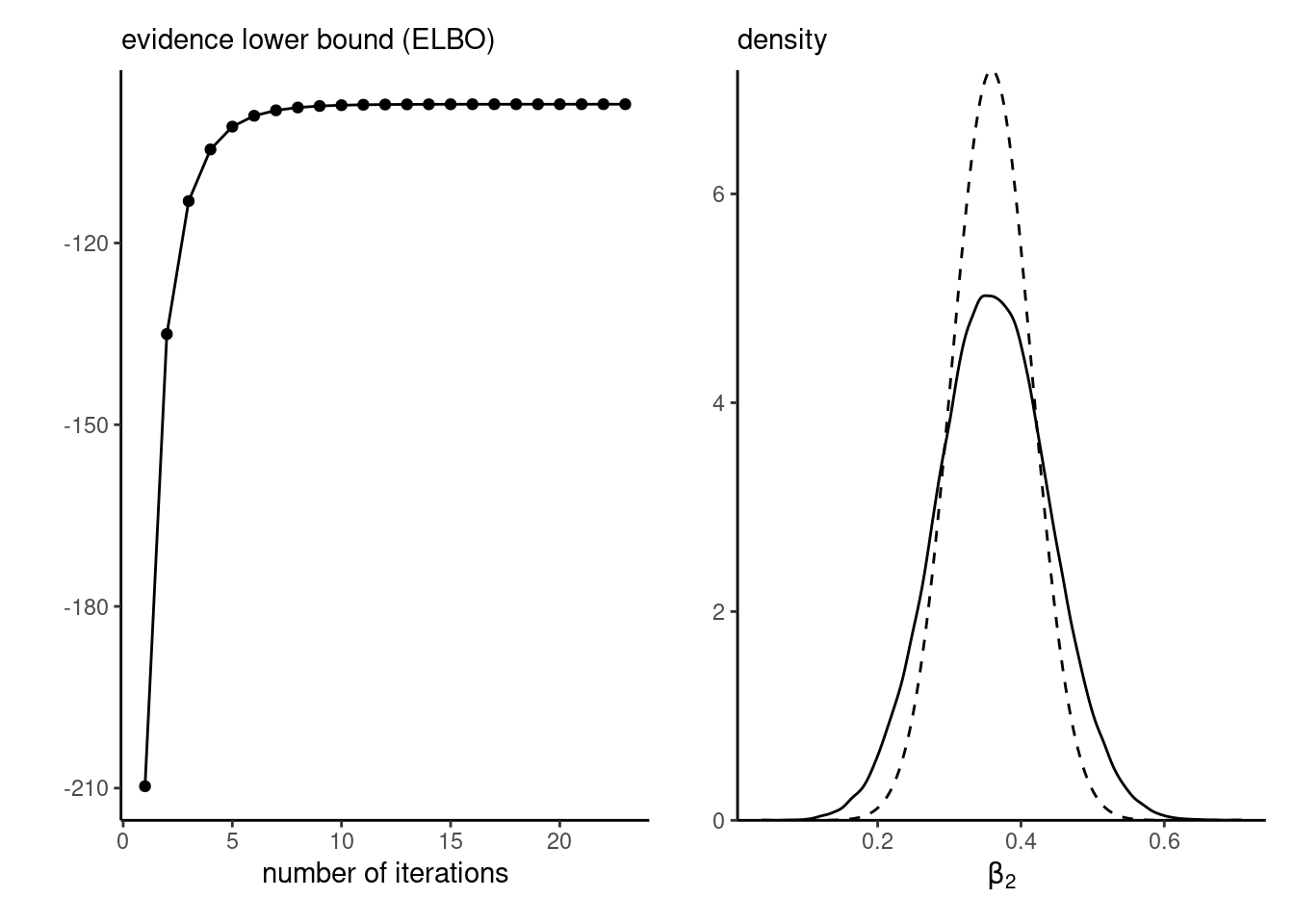

With vague priors, the coefficients for the mean \(\boldsymbol{\mu}_{\boldsymbol{\beta}}=(\beta_0, \beta_1, \beta_2)^\top\) match the frequentist point estimates of the probit regression to four significant digits. Convergence is very fast, as shown by the ELBO plot in the left panel of Figure 10.5. The posterior density approximation implied by variational inference, shown in the right panel of Figure 10.5, is once again underdispersed relative to the true marginal posterior.

Given the strong requirements for getting tractable conditionals, coordinate-ascent variational inference is mostly useful in ultra high-dimensional settings where Markov chain Monte Carlo algorithms are not viable. This is notably the case for regression models with the discrete spike-and-slab of Definition 8.2, models for image processing or topic modelling using latent Dirichlet allocation.

10.3 Automatic differentiation variational inference

We consider alternative numerical schemes which rely on stochastic optimization. The key idea behind these methods is that we can use gradient-based algorithms, and approximate the expectations with respect to \(g\) by drawing samples from the approximating densities. This gives rise to a general omnibus procedure for optimization, although some schemes capitalize on the structure of the approximating family. Hoffman et al. (2013) consider stochastic gradients for mean-field approximations in exponential families, using natural gradients to devise an algorithm. While efficient within this context, it is not a generic algorithm.

Ranganath, Gerrish, and Blei (2014) extend this to more general distributions by noting that the gradient of the ELBO, interchanging the derivative and the integral using the dominated convergence theorem, is \[\begin{align*} \frac{\partial}{\partial \boldsymbol{\psi}} \mathsf{ELBO}(g) &= \int g(\boldsymbol{\theta}; \boldsymbol{\psi}) \frac{\partial}{\partial \boldsymbol{\psi}} \log \left( \frac{p(\boldsymbol{\theta}, \boldsymbol{y})}{g(\boldsymbol{\theta}; \boldsymbol{\psi})}\right) \mathrm{d} \boldsymbol{\theta} + \int \frac{\partial g(\boldsymbol{\theta}; \boldsymbol{\psi})}{\partial \boldsymbol{\psi}} \times \log \left( \frac{p(\boldsymbol{\theta}, \boldsymbol{y})}{g(\boldsymbol{\theta}; \boldsymbol{\psi})}\right) \mathrm{d} \boldsymbol{\theta} \\& = \int \frac{\partial \log g(\boldsymbol{\theta}; \boldsymbol{\psi})}{\partial \boldsymbol{\psi}} \times \log \left( \frac{p(\boldsymbol{\theta}, \boldsymbol{y})}{g(\boldsymbol{\theta}; \boldsymbol{\psi})}\right) g(\boldsymbol{\theta}; \boldsymbol{\psi})\mathrm{d} \boldsymbol{\theta} \end{align*}\] where the first integral vanishes because \(p(\boldsymbol{\theta}, \boldsymbol{y})\) does not depend on \(\boldsymbol{\psi}\), and \[\begin{align*} \int \frac{\partial \log g(\boldsymbol{\theta}; \boldsymbol{\psi})}{\partial \boldsymbol{\psi}} g(\boldsymbol{\theta}; \boldsymbol{\psi}) \mathrm{d} \boldsymbol{\theta} & = \int \frac{\partial g(\boldsymbol{\theta}; \boldsymbol{\psi})}{\partial \boldsymbol{\psi}} \mathrm{d} \boldsymbol{\theta} \\&= \frac{\partial}{\partial \boldsymbol{\psi}}\int g(\boldsymbol{\theta}; \boldsymbol{\psi}) \mathrm{d} \boldsymbol{\theta} = 0. \end{align*}\] The integral of a density is one regardless of the value of \(\boldsymbol{\psi}\), so its derivative vanishes.
We are left with the expected value \[\begin{align*} \mathsf{E}_{g}\left\{\frac{\partial \log g(\boldsymbol{\theta}; \boldsymbol{\psi})}{\partial \boldsymbol{\psi}} \times \log \left( \frac{p(\boldsymbol{\theta}, \boldsymbol{y})}{g(\boldsymbol{\theta}; \boldsymbol{\psi})}\right)\right\} \end{align*}\] which we can approximate via Monte Carlo by drawing samples from \(g\). Ranganath, Gerrish, and Blei (2014) provide two methods to reduce the variance of this expression using control variates and Rao–Blackwellization, as excessive variance hinders the convergence and requires larger Monte Carlo sample sizes to be reliable. In the context of large samples of independent observations, we can also resort to mini-batching, by randomly selecting a subset of observations.
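The Monte Carlo estimator of this gradient can be sketched for a toy problem in which the exact gradient is known. The target `log_joint`, the parametrization \(\boldsymbol{\psi} = (m, \omega)\) with \(s = \exp(\omega),\) and the function name `elbo_grad_score` are assumptions of this illustration; no variance reduction is applied.

```r
set.seed(2014)
# Unnormalized log target (hypothetical): Gaussian kernel centred at one
log_joint <- function(theta) -0.5 * (theta - 1)^2
# Score-function estimator of the ELBO gradient for g = Gauss(m, s^2),
# with psi = (m, omega) and s = exp(omega)
elbo_grad_score <- function(m, omega, B = 1e5) {
  s <- exp(omega)
  theta <- rnorm(B, mean = m, sd = s)
  log_ratio <- log_joint(theta) - dnorm(theta, m, s, log = TRUE)
  z <- (theta - m) / s
  score_m <- z / s # derivative of log g with respect to m
  score_omega <- z^2 - 1 # derivative of log g with respect to omega
  c(m = mean(score_m * log_ratio), omega = mean(score_omega * log_ratio))
}
m <- -0.5
omega <- log(0.8)
grad_mc <- elbo_grad_score(m, omega)
# Exact gradient, available here because the ELBO has a closed form:
# ELBO = -0.5 * {(m - 1)^2 + s^2} + omega + constant
grad_exact <- c(m = -(m - 1), omega = 1 - exp(2 * omega))
rbind(grad_mc, grad_exact)
```

Even in this simple setting a large number of draws is used, which hints at why the variance reduction techniques mentioned above matter in practice.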

We can use the Monte Carlo approximation to derive a stochastic gradient algorithm.

Proposition 10.2 (Stochastic gradient descent) Consider \(f(\boldsymbol{\theta})\) a differentiable function with gradient \(\nabla f(\boldsymbol{\theta})\) and \(\rho_t\) a Robbins–Monro sequence of step sizes satisfying \(\sum_{t=1}^\infty \rho_t = \infty\) and \(\sum_{t=1}^\infty \rho^2_t< \infty.\)

To maximize \(f(\boldsymbol{\theta})\), we construct a series of first-order approximations starting from \(\boldsymbol{\theta}^{(0)}\) with \[\begin{align*} \boldsymbol{\theta}^{(t)} = \boldsymbol{\theta}^{(t-1)} +\rho_t \mathsf{E}\left\{\nabla f(\boldsymbol{\theta}^{(t-1)})\right\}, \end{align*}\] where the expected value is evaluated via Monte Carlo, until the change \(\|\boldsymbol{\theta}^{(t)} - \boldsymbol{\theta}^{(t-1)}\|\) is less than some tolerance value.
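A minimal sketch of the recursion, assuming a hypothetical concave objective \(f(\theta) = -(\theta-2)^2/2\) whose gradient is observed with standard Gaussian noise, and the step sizes \(\rho_t = 1/t\):

```r
set.seed(1951)
# Noisy gradient of f(theta) = -0.5 * (theta - 2)^2 (hypothetical objective)
noisy_grad <- function(theta) -(theta - 2) + rnorm(1)
theta <- 0
niter <- 5000L
for (iter in seq_len(niter)) {
  rho <- 1 / iter # satisfies sum(rho) = Inf and sum(rho^2) < Inf
  theta <- theta + rho * noisy_grad(theta)
}
theta
```

With this particular choice of step size and objective, the iterate after \(t\) steps is the running average of \(t\) noisy observations of the maximizer, so the noise averages out at the usual Monte Carlo rate.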

The speed of convergence of the stochastic gradient descent depends on multiple factors:

- the properties of the function (here the ELBO) under evaluation. Good performance is obtained for log-concave distributions.

- the level of noise of the gradient estimator. Less noisy gradient estimators are preferable.

- good starting values, as the algorithm converges to a local maximum and may struggle if we are in a region where the objective function is flat.

- the Robbins–Monro sequence used for the step size, as the steps may lead to divergences unless we have a globally concave objective function.

The second point is worth some further discussion. Some families of distributions, notably location-scale families (cf. Definition 1.12) and exponential families (Definition 1.13), are particularly convenient, because we can get expressions for the ELBO that are simpler and lead to less noisy gradient estimators. For approximating distributions from an exponential family, we have sufficient statistics \(S_k \equiv t_k(\boldsymbol{\theta})\) and, under mean-field, the gradient of \(\log g\) with respect to the natural parameters reduces to \(S_k\), up to an additive term that does not depend on \(\boldsymbol{\theta}\).

Kucukelbir et al. (2017) propose a stochastic gradient algorithm, but with two main innovations. The first is the general use of Gaussian approximating densities, with a parameter transformation \(T: \boldsymbol{\Theta} \mapsto \mathbb{R}^p\) that maps the support of the parameters to the real space via \(T(\boldsymbol{\theta})=\boldsymbol{\zeta}.\) We then consider an approximation \(g(\boldsymbol{\zeta}; \boldsymbol{\psi})\) where \(\boldsymbol{\psi}\) consists of mean parameters \(\boldsymbol{\mu}\) and covariance \(\boldsymbol{\Sigma}\), parametrized either in terms of independent components (mean-field) via \(\boldsymbol{\Sigma}=\mathsf{diag}\{\exp(\boldsymbol{\omega})\}\), or through a decomposition of the covariance \(\boldsymbol{\Sigma} = \mathbf{LL}^\top\) (full-rank). The full-rank approximation is of course more flexible when the transformed parameters \(\boldsymbol{\zeta}\) are correlated, but is more expensive to compute than the mean-field approximation. The change of variable introduces a Jacobian term in the density \(p(\boldsymbol{\theta}, \boldsymbol{y})\) once it is expressed in terms of \(\boldsymbol{\zeta}.\) Another benefit is that the Gaussian entropy admits a closed-form expression that depends only on the covariance, so is not random.

Proposition 10.3 (Entropy of Gaussian) The entropy of the multivariate Gaussian, for \(g\) the density of \(\boldsymbol{X} \sim \mathsf{Gauss}_p(\boldsymbol{\mu}, \boldsymbol{\Sigma})\), is \[\begin{align*} - \mathsf{E}_g(\log g) &= \frac{p\log(2\pi) + \log|\boldsymbol{\Sigma}|}{2} + \frac{1}{2}\mathsf{E}_g\left\{ (\boldsymbol{X}-\boldsymbol{\mu})^\top\boldsymbol{\Sigma}^{-1}(\boldsymbol{X}-\boldsymbol{\mu})\right\} \\& = \frac{p\log(2\pi) + \log|\boldsymbol{\Sigma}|}{2} + \frac{1}{2}\mathsf{E}_g\left[ \mathsf{tr}\left\{(\boldsymbol{X}-\boldsymbol{\mu})^\top\boldsymbol{\Sigma}^{-1}(\boldsymbol{X}-\boldsymbol{\mu})\right\}\right] \\& = \frac{p\log(2\pi) + \log|\boldsymbol{\Sigma}|}{2} + \frac{1}{2}\mathsf{tr}\left[\boldsymbol{\Sigma}^{-1}\mathsf{E}_g\left\{(\boldsymbol{X}-\boldsymbol{\mu})(\boldsymbol{X}-\boldsymbol{\mu})^\top\right\}\right] \\& =\frac{p+p\log(2\pi) + \log |\boldsymbol{\Sigma}|}{2}. \end{align*}\] This follows from taking the trace of a \(1\times 1\) matrix, and applying a cyclic permutation to which the trace is invariant. Since the trace is a linear operator, we can interchange the trace with the expected value. Finally, we have \(\mathsf{E}_g\left\{(\boldsymbol{X}-\boldsymbol{\mu})(\boldsymbol{X}-\boldsymbol{\mu})^\top\right\}=\boldsymbol{\Sigma}\), and the trace of a \(p \times p\) identity matrix is simply \(p\).
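As a sanity check of the closed-form entropy, the following sketch compares it with a Monte Carlo estimate of \(-\mathsf{E}_g(\log g)\). The mean vector and covariance matrix are arbitrary values chosen for illustration, and the helper names are ours.

```python
import numpy as np

def gaussian_entropy(Sigma):
    # Closed form: {p + p * log(2 * pi) + log|Sigma|} / 2
    p = Sigma.shape[0]
    _, logdet = np.linalg.slogdet(Sigma)
    return 0.5 * (p + p * np.log(2.0 * np.pi) + logdet)

def gaussian_logpdf(x, mu, Sigma):
    # Log density of Gauss_p(mu, Sigma) evaluated at each row of x
    p = Sigma.shape[0]
    _, logdet = np.linalg.slogdet(Sigma)
    centred = x - mu
    quad = np.einsum("ni,ij,nj->n", centred, np.linalg.inv(Sigma), centred)
    return -0.5 * (p * np.log(2.0 * np.pi) + logdet + quad)

rng = np.random.default_rng(10)
mu = np.array([1.0, -1.0, 0.5])
Sigma = np.array([[2.0, 0.3, 0.0], [0.3, 1.0, -0.2], [0.0, -0.2, 0.5]])
draws = rng.multivariate_normal(mu, Sigma, size=100_000)
mc_entropy = -gaussian_logpdf(draws, mu, Sigma).mean()
print(mc_entropy, gaussian_entropy(Sigma))
```

The closed form does not involve \(\boldsymbol{\mu}\) and requires no simulation, which is why this term of the ELBO contributes no Monte Carlo noise.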

The transformation to \(\mathbb{R}^p\) is not unique and different choices may yield different approximations. Choosing the optimal transformation would require knowledge of the true posterior, which is intractable.
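As a simple worked illustration (the scalar setting and the choice of the logarithm are assumptions made for this example only), consider a positive parameter \(\theta \in (0, \infty)\) and take \(T(\theta) = \log \theta = \zeta\), so that \(T^{-1}(\zeta) = \exp(\zeta)\) and the Jacobian of the inverse transformation is \(\exp(\zeta)\). The logarithm of the joint density on the unconstrained scale is then \[\begin{align*} \log p\left\{\boldsymbol{y}, \theta = \exp(\zeta)\right\} + \log\left|\frac{\partial \exp(\zeta)}{\partial \zeta}\right| = \log p\left\{\boldsymbol{y}, \exp(\zeta)\right\} + \zeta, \end{align*}\] and a Gaussian approximation for \(\zeta\) corresponds to a log-Gaussian approximation for \(\theta\). Another monotone map from \((0, \infty)\) to \(\mathbb{R}\) would give a different implied family for \(\theta\).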

We give next some details about the derivation of the full-rank approximation. We work with the matrix-log of the covariance matrix, defined through its eigendecomposition (or singular value decomposition) \(\boldsymbol{\Sigma}=\mathbf{V}\mathrm{diag}(\boldsymbol{\lambda})\mathbf{V}^{\top},\) where \(\mathbf{V}\) is a \(p\times p\) orthogonal matrix of eigenvectors, whose inverse is equal to its transpose. Most operations on the matrix only affect the eigenvalues \(\lambda_1, \ldots, \lambda_p\): the matrix-log is \[\begin{align*} \mathbf{M} =\mathbf{V}\mathrm{diag}\left\{ \frac{1}{2}\log(\boldsymbol{\lambda})\right\}\mathbf{V}^\top. \end{align*}\] Other operations on matrices are defined analogously: we write \(\exp(\boldsymbol{\Sigma}) = \mathbf{V}\mathrm{diag}\{ \exp(\boldsymbol{\lambda})\}\mathbf{V}^\top\) and \(\log(\boldsymbol{\Sigma}) = \mathbf{V}\mathrm{diag}\{ \log(\boldsymbol{\lambda})\}\mathbf{V}^\top\), so that \(\mathbf{M} = \log(\boldsymbol{\Sigma})/2\) and \(\boldsymbol{\Sigma} = \exp(2\mathbf{M})\).
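These matrix functions are straightforward to compute from the eigendecomposition. The sketch below, in which the helper `sym_matrix_function` and the covariance matrix are illustrative choices of ours, checks numerically that \(\exp(2\mathbf{M})\) recovers \(\boldsymbol{\Sigma}\), that \(\exp(\mathbf{M})\) is a symmetric square root of \(\boldsymbol{\Sigma}\), and that \(\mathrm{trace}(\mathbf{M}) = \log|\boldsymbol{\Sigma}|/2\).

```python
import numpy as np

def sym_matrix_function(A, fun):
    # Apply fun to the eigenvalues of a symmetric matrix A
    eigval, eigvec = np.linalg.eigh(A)
    return (eigvec * fun(eigval)) @ eigvec.T

Sigma = np.array([[2.0, 0.3, 0.0], [0.3, 1.0, -0.2], [0.0, -0.2, 0.5]])
# Matrix-log M with eigenvalues log(lambda) / 2
M = sym_matrix_function(Sigma, lambda lam: 0.5 * np.log(lam))
Sigma_back = sym_matrix_function(2.0 * M, np.exp)  # exp(2M)
scale = sym_matrix_function(M, np.exp)             # exp(M)
half_logdet = 0.5 * np.linalg.slogdet(Sigma)[1]
print(np.allclose(Sigma_back, Sigma), np.isclose(np.trace(M), half_logdet))
```

Working with \(\mathbf{M}\) means that any symmetric matrix is a valid parameter value, so no positive definiteness constraint needs to be enforced during optimization.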

We focus on the case of the full-rank approximation; the derivation for independent components is analogous. Since the Gaussian is a location-scale family, we can rewrite the model in terms of a standardized Gaussian variable \(\boldsymbol{Z}\sim \mathsf{Gauss}_p(\boldsymbol{0}_p, \mathbf{I}_p)\) where \(\boldsymbol{\zeta} = \boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z}.\) The ELBO with the transformation is \[\begin{align*} \mathsf{ELBO}(g) & = \mathsf{E}_{\boldsymbol{Z}}\left[ \log p\left\{\boldsymbol{y}, T^{-1}(\boldsymbol{\zeta})\right\} + \log \left|\mathbf{J}_{T^{-1}}(\boldsymbol{\zeta})\right|\right] \\&\quad+\frac{p}{2}\{1+\log(2\pi)\} + \mathrm{trace}(\mathbf{M}), \end{align*}\] where we have the logarithm of the absolute value of the determinant of the Jacobian of the transformation due to the change of variable. Applying the chain rule gives \[\begin{align*} \frac{\partial}{\partial \boldsymbol{\psi}}\log p\{\boldsymbol{y}, T^{-1}(\boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z})\} = \frac{\partial \log p(\boldsymbol{y}, \boldsymbol{\theta})}{\partial \boldsymbol{\theta}} \frac{\partial T^{-1}(\boldsymbol{\zeta})}{\partial \boldsymbol{\zeta}} \frac{\partial \{\boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z}\}}{\partial \boldsymbol{\psi}} \end{align*}\] and we retrieve the gradients of the ELBO with respect to the mean and the matrix-log of the covariance, which are \[\begin{align*} \frac{\partial \mathsf{ELBO}(g)}{\partial \boldsymbol{\mu}} &= \mathsf{E}_{\boldsymbol{Z}}\left\{\nabla p(\boldsymbol{y}, \boldsymbol{\theta})\right\} \\ \frac{\partial \mathsf{ELBO}(g)}{\partial \mathbf{M}} &= \mathsf{symm}\left( \mathsf{E}_{\boldsymbol{Z}}\left\{\nabla p(\boldsymbol{y}, \boldsymbol{\theta})\boldsymbol{Z}^\top\exp(\mathbf{M})\right\}\right) + \mathbf{I}_p,
\end{align*}\] where \(\mathsf{symm}(\mathbf{X}) = (\mathbf{X} + \mathbf{X}^\top)/2\) is the symmetrization operator and \(\nabla p(\boldsymbol{y}, \boldsymbol{\theta})\) is shorthand for the gradient of the log of the transformed joint density, \[\begin{align*} \nabla p(\boldsymbol{y}, \boldsymbol{\theta}) = \frac{\partial \log p(\boldsymbol{y}, \boldsymbol{\theta})}{\partial \boldsymbol{\theta}} \frac{\partial T^{-1}(\boldsymbol{\zeta})}{\partial \boldsymbol{\zeta}} + \frac{\partial \log \left|\mathbf{J}_{T^{-1}}(\boldsymbol{\zeta})\right|}{\partial \boldsymbol{\zeta}}. \end{align*}\]

Assume for simplicity that \(T(\boldsymbol{\theta}) = \boldsymbol{\theta}\) is the identity. We can rewrite the expression for the gradient with respect to the matrix-log as \[\begin{align*} \frac{\partial \mathsf{ELBO}(g)}{\partial \mathbf{M}} &= \mathsf{symm}\left[ \mathsf{E}_{\boldsymbol{Z}}\left\{\frac{\partial \log p\{\boldsymbol{y}, \boldsymbol{\theta}=\boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z}\}}{\partial \boldsymbol{\theta}}\boldsymbol{Z}^\top\exp(\mathbf{M})\right\}\right] + \mathbf{I}_p \\& = \mathsf{symm}\left( \mathsf{E}_{\boldsymbol{Z}}\left[\frac{\partial^2 \log p\{\boldsymbol{y}, \boldsymbol{\theta}=\boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z}\}}{\partial \boldsymbol{\theta}\partial \boldsymbol{\theta}^\top}\exp(2\mathbf{M})\right]\right) + \mathbf{I}_p. \end{align*}\] The first expression typically leads to a noisier gradient estimator, but the second requires derivation of the Hessian matrix. The equivalence follows from Stein’s lemma, which can be proved using integration by parts.
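The two forms of the gradient with respect to \(\mathbf{M}\) can be compared numerically. The sketch below assumes a hypothetical quadratic log joint density, \(\log p(\boldsymbol{y}, \boldsymbol{\theta}) = -(\boldsymbol{\theta}-\boldsymbol{m})^\top\mathbf{Q}(\boldsymbol{\theta}-\boldsymbol{m})/2\), whose Hessian \(-\mathbf{Q}\) is constant, so the second form can be evaluated without simulation. All numerical values and helper names are illustrative.

```python
import numpy as np

def sym_expm(M):
    # Matrix exponential of a symmetric matrix via its eigendecomposition
    eigval, eigvec = np.linalg.eigh(M)
    return (eigvec * np.exp(eigval)) @ eigvec.T

def symm(X):
    # Symmetrization operator
    return 0.5 * (X + X.T)

# Hypothetical target: log p(y, theta) = -0.5 * (theta - m)' Q (theta - m)
m = np.array([1.0, -1.0])
Q = np.array([[2.0, 0.5], [0.5, 1.0]])

mu = np.array([0.0, 0.0])
M = np.array([[0.1, 0.2], [0.2, -0.3]])
S = sym_expm(M)  # exp(M), symmetric

rng = np.random.default_rng(1994)
Z = rng.standard_normal((200_000, 2))
theta = mu + Z @ S            # rows are mu + exp(M) z, since S is symmetric
grad = -(theta - m) @ Q       # gradient of log p at each draw (Q symmetric)

# First form: Monte Carlo average of grad z' exp(M)
outer = grad.T @ Z / len(Z)
first = symm(outer @ S) + np.eye(2)
# Second form: Hessian times exp(2M), exact here since the Hessian is constant
second = symm(-Q @ sym_expm(2.0 * M)) + np.eye(2)
print(first, second, sep="\n")
```

The two matrices agree up to Monte Carlo error; for non-Gaussian targets, both forms would require simulation and the Hessian would have to be evaluated at each draw.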

Proposition 10.4 (Stein’s lemma) Consider a differentiable function \(h: \mathbb{R}^d \to \mathbb{R}\) and a random vector \(\boldsymbol{X} \sim \mathsf{Gauss}_d(\boldsymbol{\mu}, \boldsymbol{\Sigma})\) such that the gradient is absolutely integrable, \(\mathsf{E}_{\boldsymbol{X}}\{|\nabla_i h(\boldsymbol{X})|\} < \infty\) for \(i=1, \ldots, d.\) Then (Liu 1994), \[\begin{align*} \mathsf{E}_{\boldsymbol{X}}\left\{h(\boldsymbol{X})(\boldsymbol{X}-\boldsymbol{\mu})\right\} = \boldsymbol{\Sigma}\mathsf{E}_{\boldsymbol{X}}\left\{\nabla h(\boldsymbol{X})\right\}. \end{align*}\]

We can replace expectations by Monte Carlo estimation and apply stochastic gradient descent: for example, an estimator of the ELBO for the case without transformation, with \(\boldsymbol{\theta} = \boldsymbol{\zeta} \in \mathbb{R}^p\), is \[\begin{align*} \frac{1}{B}\sum_{b=1}^B \log p\{\boldsymbol{y}, \boldsymbol{\theta} = \boldsymbol{\mu} + \exp(\mathbf{M})\boldsymbol{Z}_b\} + \frac{p}{2}\{\log(2\pi)+1\} + \mathrm{trace}(\mathbf{M}) \end{align*}\] for \(\boldsymbol{Z}_1, \ldots, \boldsymbol{Z}_B \sim \mathsf{Gauss}_p(\boldsymbol{0}_p, \mathbf{I}_p).\)
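A minimal sketch of this estimator follows, assuming the identity transformation and a hypothetical unnormalized Gaussian log joint density with mean \(\boldsymbol{m}\) and precision \(\mathbf{Q}\). This target is chosen only because the ELBO is then available in closed form for comparison; the function names are ours.

```python
import numpy as np

def sym_expm(M):
    # Matrix exponential of a symmetric matrix via its eigendecomposition
    eigval, eigvec = np.linalg.eigh(M)
    return (eigvec * np.exp(eigval)) @ eigvec.T

def log_joint(theta, m, Q):
    # Hypothetical unnormalized Gaussian log p(y, theta), one value per row
    centred = theta - m
    return -0.5 * np.einsum("ni,ij,nj->n", centred, Q, centred)

def elbo_estimate(mu, M, m, Q, n_draws, rng):
    # Monte Carlo average of log p plus the closed-form Gaussian entropy
    p = mu.shape[0]
    Z = rng.standard_normal((n_draws, p))
    theta = mu + Z @ sym_expm(M)
    entropy = 0.5 * p * (np.log(2.0 * np.pi) + 1.0) + np.trace(M)
    return log_joint(theta, m, Q).mean() + entropy

def elbo_exact(mu, M, m, Q):
    # E_g(log p) = -0.5 * {(mu - m)' Q (mu - m) + tr(Q Sigma)} for this target
    p = mu.shape[0]
    Sigma = sym_expm(2.0 * M)
    expected = -0.5 * ((mu - m) @ Q @ (mu - m) + np.trace(Q @ Sigma))
    return expected + 0.5 * p * (np.log(2.0 * np.pi) + 1.0) + np.trace(M)

rng = np.random.default_rng(2017)
m = np.array([1.0, -1.0])
Q = np.array([[2.0, 0.5], [0.5, 1.0]])
mu = np.array([0.0, 0.0])
M = np.array([[0.1, 0.2], [0.2, -0.3]])
estimate = elbo_estimate(mu, M, m, Q, n_draws=200_000, rng=rng)
print(estimate, elbo_exact(mu, M, m, Q))
```

Only the first term is random; in practice a much smaller \(B\) is used at each iteration of the optimization, and a larger one to monitor convergence.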

Compared to the black-box variational inference algorithm, this requires calculating the gradient of the log posterior with respect to \(\boldsymbol{\theta}.\) This step can be done using automatic differentiation (hence the terminology ADVI), and moreover this gradient estimator is several orders of magnitude less noisy than the black-box counterpart. The ELBO can be approximated via Monte Carlo integration.

We can thus build a stochastic gradient algorithm with a Robbins–Monro sequence of updates. Kucukelbir et al. (2017) use an adaptive step-size sequence \(\rho_t\) for the stochastic gradient descent, which we make explicit: denote the \(k\)th entry of the gradient evaluated at step \(t\) by \(g_{k,t}\), and set \[\begin{align*} \rho_{k,t} &= \eta\frac{ t^{-1/2+10^{-15}}}{\tau + s_{k,t}} \\ s^2_{k,t} &= \alpha g_{k,t}^2 + (1-\alpha)s^2_{k,t-1} = \alpha \sum_{i=2}^{t} (1-\alpha)^{t-i} g_{k,i}^2 + (1-\alpha)^{t-1}g_{k,1}^2 \end{align*}\] with \(s^2_{k,1}=g_{k,1}^2,\) where the delay \(\tau>0\) is some positive constant (typically 1) to prevent division by zero, \(\eta>0\) controls the step size (smaller values may be required to avoid divergence) and \(0<\alpha<1\) controls the weight given to the current squared gradient relative to past values. This parameter-specific scaling helps with updates of parameters on very different scales. For matrix parameters, we vectorize the matrix entries and apply the step-size sequence to each element separately.
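The step-size recursion can be sketched as follows. This is an illustration rather than the reference implementation: the function name and the default values of \(\eta\), \(\tau\) and \(\alpha\) are arbitrary choices of ours, and we assume the denominator \(\tau + s_{k,t}\), with \(s_{k,t}\) the square root of the running average of squared gradients, as in Kucukelbir et al. (2017).

```python
import numpy as np

def adaptive_step_sizes(gradients, eta=0.1, tau=1.0, alpha=0.1, eps=1e-15):
    # gradients: array of shape (T, K), one row of gradient entries per iteration
    gradients = np.asarray(gradients, dtype=float)
    steps = np.empty_like(gradients)
    s2 = gradients[0] ** 2  # initialization s^2_{k,1} = g^2_{k,1}
    for t, g in enumerate(gradients, start=1):
        if t > 1:
            # Exponentially weighted average of squared gradients
            s2 = alpha * g ** 2 + (1.0 - alpha) * s2
        steps[t - 1] = eta * t ** (-0.5 + eps) / (tau + np.sqrt(s2))
    return steps

# Two parameters whose gradients live on very different scales
grads = np.tile(np.array([2.0, 0.01]), (5, 1))
rho = adaptive_step_sizes(grads)
print(rho)
```

The entry with the larger gradients receives smaller steps, and all step sizes decay at rate roughly \(t^{-1/2}\), which is how the scheme accommodates parameters on different scales.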

The ADVI algorithm is implemented in Stan (Carpenter et al. 2017); see the manual for more details.